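I did not really want to use this title. TCP-related topics simply catch more eyes. The subject of this article is eBPF, not TCP.

Writing a TCP congestion control algorithm in eBPF is just one perfectly ordinary example of what this article is about.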

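Let's start with two problems, or rather two pain points:

1. The kernel is carrying more and more policy.

2. Kernel interfaces are unstable.

A few words on each.

"Carrying more policy" means that more and more clever algorithms, small tricks, and other fast-changing code are making their way into the kernel. Examples include, but are not limited to: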

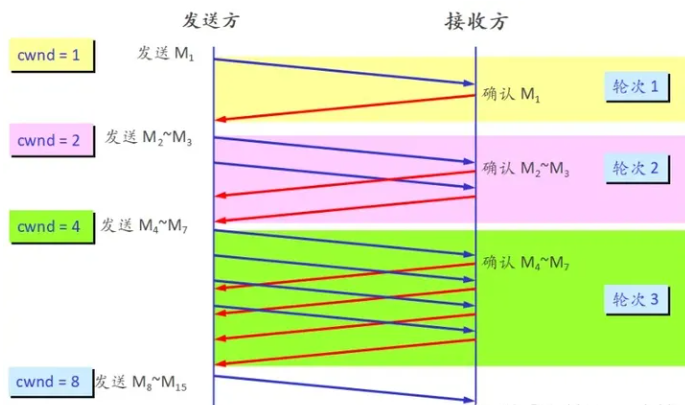

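TCP congestion control algorithms.

TC queueing disciplines and packet scheduling algorithms.

Hash algorithms for all kinds of lookups.

…

Almost all of this policy code is implemented as "callback functions". This also shows that the Linux kernel is modular in design, with mechanism separated from policy: when a new algorithm is needed, you only have to register a new set of callbacks.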
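The register-a-set-of-callbacks pattern can be sketched in ordinary user-space C. This is a toy model, not kernel code: `struct cc_ops`, `cc_register`, `on_loss`, and both policies are hypothetical names invented for illustration, loosely inspired by how the kernel keeps a table of congestion control operations.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical ops table: the mechanism only knows this interface,
 * each policy fills in its own callback. */
struct cc_ops {
    const char *name;
    unsigned int (*ssthresh)(unsigned int cwnd);
};

#define MAX_OPS 8
static const struct cc_ops *registry[MAX_OPS];
static size_t registry_len;

/* Look a policy up by name; returns NULL if it was never registered. */
static const struct cc_ops *cc_find(const char *name)
{
    for (size_t i = 0; i < registry_len; i++)
        if (strcmp(registry[i]->name, name) == 0)
            return registry[i];
    return NULL;
}

/* Register a policy. Returns 0 on success, -1 if the table is full
 * or the name is already taken. */
int cc_register(const struct cc_ops *ops)
{
    assert(ops != NULL && ops->name != NULL && ops->ssthresh != NULL);
    if (registry_len == MAX_OPS || cc_find(ops->name) != NULL)
        return -1;
    registry[registry_len++] = ops;
    return 0;
}

/* Mechanism: on packet loss, ask the selected policy for the new threshold.
 * An unknown policy leaves the window unchanged. */
unsigned int on_loss(const char *policy, unsigned int cwnd)
{
    const struct cc_ops *ops = cc_find(policy);
    return ops ? ops->ssthresh(cwnd) : cwnd;
}

/* Policy A (toy): halve the window, but never go below 2. */
static unsigned int halve_ssthresh(unsigned int cwnd)
{
    unsigned int half = cwnd / 2;
    return half > 2 ? half : 2;
}

/* Policy B (toy): back off to 70% of the window. */
static unsigned int gentle_ssthresh(unsigned int cwnd)
{
    return cwnd * 7 / 10;
}

const struct cc_ops halve_ops  = { .name = "halve",  .ssthresh = halve_ssthresh };
const struct cc_ops gentle_ops = { .name = "gentle", .ssthresh = gentle_ssthresh };
```

After `cc_register(&halve_ops)` and `cc_register(&gentle_ops)`, a call such as `on_loss("gentle", 20)` dispatches to the second policy without the mechanism knowing anything about how that policy works. Adding a third algorithm means writing one more `struct cc_ops`, nothing else.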

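However, …

However, this is not quite perfect, because of point 2 above: "kernel interfaces are unstable"! Internal data structures and APIs carry no compatibility guarantee from one kernel version to the next.

What does this mean?

It means that even a neatly encapsulated algorithm module needs a separately maintained code base for each Linux kernel version. When a kernel module has to be upgraded because of a version change, adapting it to the new data structures and APIs is usually time-consuming and thankless work.

Yet many algorithms have nothing to do with kernel data structures or kernel APIs at all.

Between two kernel versions, a data structure may only have had fields moved, added, or renamed. Even so, the algorithm module has to be recompiled…

If only function and struct-field references could be relocated when the module is loaded into the kernel!

Our goal is: write once, run many times.

Once again Facebook is ahead of the pack, with BPF CO-RE (Compile Once – Run Everywhere):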

http://vger.kernel.org/bpfconf2019_talks/bpf-core.pdf

That's right, eBPF. That's the one!

Here is how the talk is described:

BPF CO-RE talk discussed issues that developers currently run into when developing, testing, deploying, and running BPF applications at scale, taking Facebook’s experience as an example. Today, most types of BPF programs access internal kernel structures, which necessitates the need to compile BPF program’s C code “on the fly” on every single production machine due to changing struct/union layouts and definitions inside kernel. This causes many problems and inconveniences, starting from the need to have kernel sources available everywhere and in sync with running kernel, which is a hassle to set up and maintain. Reliance on embedded LLVM/Clang for compilation means big application binary size, increased memory usage, and some rare, but impactful production issues due to increased resource usage due to compilation. With current approach testing BPF programs against multitude of production kernels is a stressful, time-consuming, and error-prone process. The goal of BPF CO-RE is to solve all of those issues and move BPF app development flow closer to typical experience, one would expect when developing applications: compile BPF code once and distribute it as a binary. Having a good way to validate that BPF application will run without issues on all active kernels is also a must.

The complexity hides in the need to adjust compiled BPF assembly code to every specific kernel in production, as memory layout of kernel data structures changes between kernel versions and even different kernel build configurations. BPF CO-RE solution relies on self-describing kernel providing BTF type information and layout (ability to produce it was recently committed upstream). With the help from Clang compiler emitting special relocations during BPF compilation and with libbpf as a dynamic loader, it’s possible to reconciliate correct field offsets just before loading BPF program into kernel. As BPF programs are often required to work without modification (i.e., re-compilation) on multiple kernel versions/configurations with incompatible internal changes, there is a way to specify conditional BPF logic based on actual kernel version and configuration, also using relocations emitted from Clang. Not having to rely on kernel headers significantly improves the testing story and makes it possible to have a good tooling support to do pre-validation before deploying to production.

There are still issues which will have to be worked around for now. There is currently no good way to extract #define macro from kernel, so this has to be dealt with by copy/pasting the necessary definitions manually. Code directly relying on size of structs/unions has to be avoided as well, as it isn’t relocatable in general case. While there are some raw ideas how to solve issues like that in the future, BPF CO-RE developers prioritize providing basic mechanisms to allow “Compile Once - Run Everywhere” approach and significantly improve testing and pre-validation experience through better tooling, enabled by BPF CO-RE. As existing applications are adapted to BPF CO-RE, there will be new learning and better understanding of additional facilities that need to be provided to provide best developer experience possible.

This mechanism can:

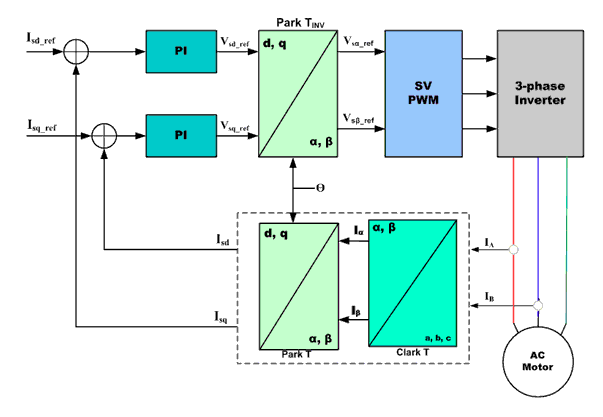

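Implement the set of callback functions of a kernel module as a set of eBPF bytecode programs.

Relocate accesses to the kernel struct fields that the program uses, so that they match the corresponding offsets in the running kernel.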
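The field-relocation idea can be illustrated with a small user-space model. Everything here is a stand-in chosen for illustration: the two struct layouts are made up, `struct field_info` plays the role of the BTF description of the running kernel, `struct field_access` plays the role of a relocation record emitted by the compiler, and `relocate` plays the role of the loader. Real CO-RE relocations in libbpf are considerably richer than this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Two made-up layouts of "the same" struct in two kernel builds. */
struct sock_v1 {
    uint32_t snd_cwnd;
    uint32_t snd_ssthresh;
};

struct sock_v2 {
    uint64_t new_field;     /* added in the newer build */
    uint32_t snd_ssthresh;  /* moved */
    uint32_t snd_cwnd;      /* moved */
};

/* Stand-in for type information: field name -> offset in the running kernel. */
struct field_info {
    const char *name;
    size_t offset;
};

static const struct field_info layout_v1[] = {
    { "snd_cwnd",     offsetof(struct sock_v1, snd_cwnd) },
    { "snd_ssthresh", offsetof(struct sock_v1, snd_ssthresh) },
};

static const struct field_info layout_v2[] = {
    { "snd_cwnd",     offsetof(struct sock_v2, snd_cwnd) },
    { "snd_ssthresh", offsetof(struct sock_v2, snd_ssthresh) },
};

/* Stand-in for a relocation record: "this access wants field X".
 * The offset is filled in at load time, not at compile time. */
struct field_access {
    const char *field;
    size_t offset;
};

/* Load time: resolve every recorded access against the target layout.
 * Returns 0 on success, -1 if a field does not exist in the target. */
int relocate(struct field_access *acc, size_t n_acc,
             const struct field_info *layout, size_t n_fields)
{
    for (size_t i = 0; i < n_acc; i++) {
        size_t j;
        for (j = 0; j < n_fields; j++) {
            if (strcmp(acc[i].field, layout[j].name) == 0) {
                acc[i].offset = layout[j].offset;
                break;
            }
        }
        if (j == n_fields)
            return -1;
    }
    return 0;
}

/* Run time: read a 32-bit field through the patched offset. */
uint32_t read_u32(const void *obj, const struct field_access *acc)
{
    uint32_t v;
    memcpy(&v, (const char *)obj + acc->offset, sizeof(v));
    return v;
}
```

An access resolved against `layout_v1` reads `snd_cwnd` correctly from a `struct sock_v1`, but the same offset applied to a `struct sock_v2` lands on `new_field`. Running `relocate` again with `layout_v2` fixes the offset without recompiling the code that performs the read, which is the whole point of relocating by field name.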

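The consequence:

Many kernel algorithm modules can now be written in eBPF.

Linux 5.6 ships an example that uses a TCP congestion control algorithm. Let's take a look: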

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=09903869f69f

As you can see, this eBPF program does not depend on the kernel version. Here is its definition of the tcp_sock struct:

    struct tcp_sock {
        struct inet_connection_sock inet_conn;

        __u32 rcv_nxt;
        __u32 snd_nxt;
        __u32 snd_una;
        __u8  ecn_flags;
        __u32 delivered;
        __u32 delivered_ce;
        __u32 snd_cwnd;
        __u32 snd_cwnd_cnt;
        __u32 snd_cwnd_clamp;
        __u32 snd_ssthresh;
        __u8  syn_data:1,         /* SYN includes data */
              syn_fastopen:1,     /* SYN includes Fast Open option */
              syn_fastopen_exp:1, /* SYN includes Fast Open exp. option */
              syn_fastopen_ch:1,  /* Active TFO re-enabling probe */
              syn_data_acked:1,   /* data in SYN is acked by SYN-ACK */
              save_syn:1,         /* Save headers of SYN packet */
              is_cwnd_limited:1,  /* forward progress limited by snd_cwnd? */
              syn_smc:1;          /* SYN includes SMC */
        __u32 max_packets_out;
        __u32 lsndtime;
        __u32 prior_cwnd;
    } __attribute__((preserve_access_index));

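Two things are worth noting here:

This struct is not the one from the kernel headers. It contains only the fields of the kernel struct that the TCP CC algorithm uses, so it is a subset of the kernel struct of the same name.

The preserve_access_index attribute means that when the eBPF bytecode is loaded, accesses to the fields of this struct are relocated to match the offsets of the same-named fields in the running kernel's struct.

Next, let's see what the TCP CC callbacks look like when implemented in eBPF: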

    BPF_TCP_OPS_3(tcp_reno_cong_avoid, void, struct sock *, sk, __u32, ack, __u32, acked)
    {
        struct tcp_sock *tp = tcp_sk(sk);

        if (!tcp_is_cwnd_limited(sk))
            return;

        /* In "safe" area, increase. */
        if (tcp_in_slow_start(tp)) {
            acked = tcp_slow_start(tp, acked);
            if (!acked)
                return;
        }
        /* In dangerous area, increase slowly. */
        tcp_cong_avoid_ai(tp, tp->snd_cwnd, acked);
    }

    ...

    SEC(".struct_ops")
    struct tcp_congestion_ops dctcp = {
        .init         = (void *)dctcp_init,
        .in_ack_event = (void *)dctcp_update_alpha,
        .cwnd_event   = (void *)dctcp_cwnd_event,
        .ssthresh     = (void *)dctcp_ssthresh,
        .cong_avoid   = (void *)tcp_reno_cong_avoid,
        .undo_cwnd    = (void *)dctcp_cwnd_undo,
        .set_state    = (void *)dctcp_state,
        .flags        = TCP_CONG_NEEDS_ECN,
        .name         = "bpf_dctcp",
    };

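Nothing special. It is written almost exactly like a kernel module. The only differences are:

It no longer depends on the kernel version. The .o bytecode you compile with llvm/clang can be loaded into any kernel that supports BTF and struct_ops, without recompiling.

It feels like user-space programming.

Yes, this is a TCP CC algorithm written in user space. The eBPF verifier checks your bytecode at load time and refuses to load eBPF code that could crash the kernel, so dangerous code has almost no chance of passing verification.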
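To make the "feels like user space" point concrete, the arithmetic of the Reno callback shown earlier can be stepped through in a plain C program. This is a simplified model under stated assumptions: `struct cc_state` is a hypothetical stand-in holding only four of the tcp_sock fields, the helpers are modeled on the kernel's tcp_slow_start and tcp_cong_avoid_ai as I understand them from kernels of that era, and the cwnd-limited check is left out because the model assumes the sender is always limited by the window.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the handful of tcp_sock fields Reno touches. */
struct cc_state {
    uint32_t snd_cwnd;        /* congestion window, in packets */
    uint32_t snd_cwnd_cnt;    /* ACK credits accumulated toward the next +1 */
    uint32_t snd_cwnd_clamp;  /* upper bound on snd_cwnd */
    uint32_t snd_ssthresh;    /* slow start threshold */
};

static uint32_t min_u32(uint32_t a, uint32_t b)
{
    return a < b ? a : b;
}

int in_slow_start(const struct cc_state *s)
{
    return s->snd_cwnd < s->snd_ssthresh;
}

/* Slow start: grow cwnd by one per ACKed packet up to ssthresh.
 * Returns the ACKed packets left over once ssthresh is reached. */
uint32_t slow_start(struct cc_state *s, uint32_t acked)
{
    uint32_t cwnd = min_u32(s->snd_cwnd + acked, s->snd_ssthresh);

    acked -= cwnd - s->snd_cwnd;
    s->snd_cwnd = min_u32(cwnd, s->snd_cwnd_clamp);
    return acked;
}

/* Additive increase: grow cwnd by one for every w packets ACKed. */
void cong_avoid_ai(struct cc_state *s, uint32_t w, uint32_t acked)
{
    assert(w > 0);

    /* Credits accumulated at a larger w are applied gently. */
    if (s->snd_cwnd_cnt >= w) {
        s->snd_cwnd_cnt = 0;
        s->snd_cwnd++;
    }

    s->snd_cwnd_cnt += acked;
    if (s->snd_cwnd_cnt >= w) {
        uint32_t delta = s->snd_cwnd_cnt / w;

        s->snd_cwnd_cnt -= delta * w;
        s->snd_cwnd += delta;
    }
    s->snd_cwnd = min_u32(s->snd_cwnd, s->snd_cwnd_clamp);
}

/* Same control flow as the eBPF tcp_reno_cong_avoid callback,
 * minus the cwnd-limited check (assumed always true in this model). */
void reno_cong_avoid(struct cc_state *s, uint32_t acked)
{
    if (in_slow_start(s)) {
        acked = slow_start(s, acked);
        if (!acked)
            return;
    }
    cong_avoid_ai(s, s->snd_cwnd, acked);
}
```

Starting from a window of 10 with a threshold of 16, ACKing 10 packets uses 6 of them to reach the threshold in slow start and banks the remaining 4 as credits. ACKing 12 more brings the credits to 16, which is one full window, so the window grows by exactly one packet to 17.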

If you want to understand how all of this works behind the scenes, reading two files is enough:

net/ipv4/bpf_tcp_ca.c

kernel/bpf/bpf_struct_ops.c

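Of course, managers will not know what any of this means.

Leather shoes in Wenzhou, Zhejiang get wet; rain gets in, but they will not get fat.

Original title: Writing a TCP Congestion Control Algorithm with eBPF

Source: WeChat official account Linuxer. Please credit the source when reposting.

Editor: haq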



發(fā)布評論請先 登錄

相關推薦

TCP協(xié)議是什么

如何用Jacinto內(nèi)部的GPtimer輸出PWM信號控制屏幕背光

EtherCAT主站ModBus TCP協(xié)議網(wǎng)關(YC-ECTM-TCP)

tcp和udp的區(qū)別和聯(lián)系

神經(jīng)網(wǎng)絡如何用無監(jiān)督算法訓練

論TCP協(xié)議中的擁塞控制機制與網(wǎng)絡穩(wěn)定性

eBPF動手實踐系列三:基于原生libbpf庫的eBPF編程改進方案簡析

基于原生libbpf庫的eBPF編程改進方案

以太網(wǎng)存儲網(wǎng)絡的擁塞管理連載案例(五)

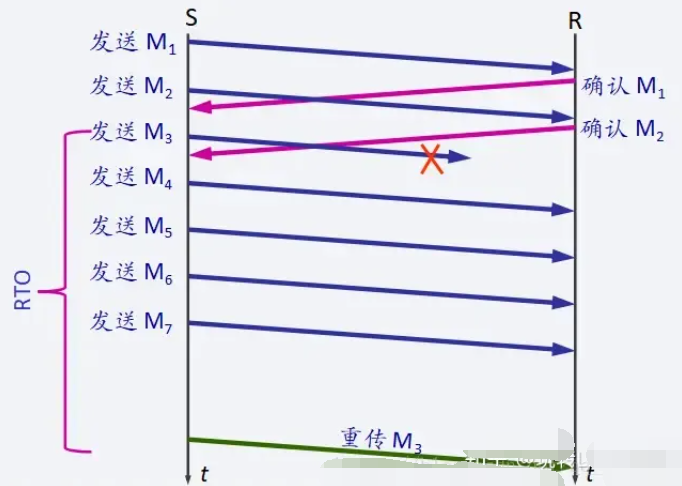

TCP協(xié)議技術之自適應重傳

一文詳解DCQCN擁塞控制算法

請問TCP擁塞控制對數(shù)據(jù)延遲有何影響?

SIMATIC S7-1500 Modbus TCP通訊

如何用eBPF寫TCP擁塞控制算法?

如何用eBPF寫TCP擁塞控制算法?

評論