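I. A few points up front:

1. Stereo calibration only means something if you photograph the chessboard with your own cameras. Tutorials that run stereo calibration on the sample images shipped with OpenCV are only demonstrating the workflow. I used to think calibrating with the bundled images would be enough, but it bothered me that our own stereo rig is certainly not mounted the way theirs was, so how could their images describe our cameras? After asking someone more experienced, I learned that calibrating with the OpenCV sample images (or any images supplied by someone else) is completely meaningless for your own rig.

2. For stereo calibration the left and right cameras must shoot at the same moment, and both images are saved. I noticed this from the OpenCV sample images: in each left/right pair the board is in exactly the same pose, apart from the difference in viewpoint between the two cameras.

3. I did single-camera (monocular) calibration first and stereo calibration afterwards. Read up on the theory as well, otherwise some of the concepts will be unclear.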
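For quick reference, the two relations the calibration steps rely on can be written out as below. This is the standard pinhole camera model as I understand it from the OpenCV documentation, not something derived in this post; the scale factor $s$, the per-image board pose $R_i, t_i$, and the subscripts $l$/$r$ are notation chosen here for illustration.

```latex
% Pinhole projection of a board point (X, Y, Z) into pixel (u, v) for image i
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
  = K \begin{bmatrix} R_i & t_i \end{bmatrix}
    \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}

% Stereo extrinsics: a point expressed in the left camera frame, moved into the right camera frame
\mathbf{X}_r = R\,\mathbf{X}_l + T
```

Single-camera calibration estimates $K$ and the distortion coefficients of one camera (plus one board pose per image); stereo calibration estimates the $R$ and $T$ that relate the two cameras.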

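II.

1. Fix the left and right cameras firmly in place, then photograph the chessboard calibration target in different poses and save the images. I took 15 images per camera; what I read online suggests 30 to 40 in total, so use your own judgement.

The chessboard image can be downloaded for free from another post on my blog. It took me a long time to find a good one; the ones I downloaded or generated with a program all had some problem or other. Knowledge deserves to be paid for, but it is exasperating that such a basic tool as a chessboard image sits behind a paywall on most blogs.

Program: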

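// Open both cameras at once, pause the live view, and save the left and right chessboard images

#include <opencv2/opencv.hpp>

#include <cstdio>

#include <iostream>

using namespace cv;

using namespace std; // boilerplate I picked up from a tutorial; it rarely changes, so I reuse it as is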

int main(int argc, char* argv[])

{

VideoCapture cap(0);

VideoCapture cap1(1); // open both cameras

if(!cap.isOpened())

{

printf("Could not open camera0...\n");

return -1;

}

if (!cap1.isOpened())

{

printf("Could not open camera1...\n");

return -2;

} // keep these checks; they make debugging easier

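Mat frame, frame1;

int pairIndex = 1; // number used in the next pair of file names

while (true)

{

if (!cap.read(frame) || !cap1.read(frame1)) // stop when either camera delivers no frame

{

printf("Could not read a frame...\n");

break;

}

imshow("camera0", frame);

imshow("camera1", frame1);

int key = waitKey(30);

if (key == 'q' || key == 27) // q or Esc quits

{

break;

}

if (key >= 0 && waitKey(0) == 's') // any other key freezes the live view; while frozen, s saves the pair and any other key resumes

{

// Work out which device really is the left camera (wave a hand in front of one lens); on my rig it is camera 1.

imwrite(cv::format("C:/Users/Administrator/Desktop/11/left%d.jpg", pairIndex), frame1);

imwrite(cv::format("C:/Users/Administrator/Desktop/11/right%d.jpg", pairIndex), frame);

pairIndex++; // the number goes up automatically, so there is no need to edit the file name by hand for each shot

}

}

cap.release();

cap1.release(); // release both cameras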

return 0;

}
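The file names of each saved pair can be generated rather than edited by hand between shots. A minimal sketch, assuming the `left%d.jpg` / `right%d.jpg` naming used in this post and numbering that starts at 1; the helper name `stereoPairNames` is made up for illustration, and the standard `std::snprintf` stands in for the Windows-only `sprintf_s` that the calibration programs use.

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#include <utility>

// Build the file names of the index-th stereo pair, e.g. "dir/left3.jpg" and "dir/right3.jpg".
// Hypothetical helper: the directory is passed in, the left/right naming follows the post.
std::pair<std::string, std::string> stereoPairNames(const std::string& dir, int index)
{
    assert(index >= 1); // the post numbers its images from 1
    char left[512];
    char right[512];
    // std::snprintf never writes past the buffer and always null-terminates.
    std::snprintf(left, sizeof(left), "%s/left%d.jpg", dir.c_str(), index);
    std::snprintf(right, sizeof(right), "%s/right%d.jpg", dir.c_str(), index);
    return std::make_pair(std::string(left), std::string(right));
}
```

Because both names come from the same index, a left image can never end up paired with a right image from a different shot.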

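2. Single-camera calibration. I adapted this program from one found online, with few changes.

// calibrate the left and right cameras separately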

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/highgui/highgui.hpp"

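#include <iostream>

#include "cv.h"

#include <cstdio>

#include <vector>

using namespace std;

using namespace cv; // the borrowed include list is long; delete any header that gets a red underline and add the ones you know you need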

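const int boardWidth = 9; // number of inner corners along the board width

const int boardHeight = 6; // number of inner corners along the board height

const int boardCorner = boardWidth * boardHeight; // total number of inner corners

const int frameNumber = 15; // number of images used for the calibration

const int squareSize = 25; // side length of one chessboard square, in mm

const Size boardSize = Size(boardWidth, boardHeight); // inner-corner grid of the board

Mat intrinsic; // camera intrinsic matrix

Mat distortion_coeff; // camera distortion coefficients

vector<Mat> rvecs; // rotation vectors

vector<Mat> tvecs; // translation vectors

vector<vector<Point2f>> corners; // corners found in every accepted image, in the same order as objRealPoint

vector<vector<Point3f>> objRealPoint; // physical corner coordinates for every accepted image

vector<Point2f> corner; // corners found in the current image

/* compute the physical coordinates of the corners on the calibration board */

void calRealPoint(vector<vector<Point3f>>& obj, int boardwidth, int boardheight, int imgNumber, int squaresize)

{

vector<Point3f> imgpoint;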

for (int rowIndex = 0; rowIndex < boardheight; rowIndex++)

{

for (int colIndex = 0; colIndex < boardwidth; colIndex++)

{

imgpoint.push_back(Point3f(rowIndex * squaresize, colIndex * squaresize, 0));

}

}

for (int imgIndex = 0; imgIndex < imgNumber; imgIndex++)

{

obj.push_back(imgpoint);

}

}

/* set initial camera parameters; this guess is optional */

void guessCameraParam(void)

{

/* allocate storage */

intrinsic.create(3, 3, CV_64FC1); // intrinsic matrix

distortion_coeff.create(5, 1, CV_64FC1); // distortion coefficients

/*

fx 0 cx

0 fy cy

0 0 1 intrinsic matrix

*/

intrinsic.at<double>(0, 0) = 256.8093262; //fx

intrinsic.at<double>(0, 2) = 160.2826538; //cx

intrinsic.at<double>(1, 1) = 254.7511139; //fy

intrinsic.at<double>(1, 2) = 127.6264572; //cy

intrinsic.at<double>(0, 1) = 0;

intrinsic.at<double>(1, 0) = 0;

intrinsic.at<double>(2, 0) = 0;

intrinsic.at<double>(2, 1) = 0;

intrinsic.at<double>(2, 2) = 1;

/*

k1 k2 p1 p2 k3 distortion coefficients (OpenCV stores them in this order)

*/

distortion_coeff.at<double>(0, 0) = -0.193740; //k1

distortion_coeff.at<double>(1, 0) = -0.378588; //k2

distortion_coeff.at<double>(2, 0) = 0.028980; //p1

distortion_coeff.at<double>(3, 0) = 0.008136; //p2

distortion_coeff.at<double>(4, 0) = 0; //k3

}

void outputCameraParam(void)

{

// The borrowed program saved the results with cvSave and printed them with cout.

// I learned printf, so I print with that instead; it works just as well.

/* intrinsic parameters */

printf("fx:%lf...\n", intrinsic.at<double>(0, 0));

printf("fy:%lf...\n", intrinsic.at<double>(1, 1));

printf("cx:%lf...\n", intrinsic.at<double>(0, 2));

printf("cy:%lf...\n", intrinsic.at<double>(1, 2));

/* distortion coefficients, in the order k1 k2 p1 p2 k3 */

printf("k1:%lf...\n", distortion_coeff.at<double>(0, 0));

printf("k2:%lf...\n", distortion_coeff.at<double>(1, 0));

printf("p1:%lf...\n", distortion_coeff.at<double>(2, 0));

printf("p2:%lf...\n", distortion_coeff.at<double>(3, 0));

printf("k3:%lf...\n", distortion_coeff.at<double>(4, 0));

}

int main()

{

int imageHeight; // image height

int imageWidth; // image width

int goodFrameCount = 0; // number of usable images

Mat rgbImage,grayImage;

Mat tImage = imread("C:/Users/Administrator/Desktop/11/right1.jpg");

if (tImage.empty())

{

printf("Could not load tImage...\n");

return -1;

}

imageHeight = tImage.rows;

imageWidth = tImage.cols;

grayImage = Mat::ones(tImage.size(), CV_8UC1);

for (int imageIndex = 1; imageIndex <= frameNumber; imageIndex++) // read each file once, so a bad image cannot stall the loop

{

char filename[100];

sprintf_s(filename, "C:/Users/Administrator/Desktop/11/right%d.jpg", imageIndex);

rgbImage = imread(filename);

if (rgbImage.empty())

{

printf("Could not load %s...\n", filename);

return -2;

}

cvtColor(rgbImage, grayImage, CV_BGR2GRAY);

imshow("Camera", grayImage);

bool isFind = findChessboardCorners(rgbImage, boardSize, corner, CALIB_CB_ADAPTIVE_THRESH + CALIB_CB_NORMALIZE_IMAGE);

//bool isFind = findChessboardCorners( rgbImage, boardSize, corner,

//CV_CALIB_CB_ADAPTIVE_THRESH | CV_CALIB_CB_FAST_CHECK | CV_CALIB_CB_NORMALIZE_IMAGE);

if (isFind == true) // every corner was found, so this image is usable

{

// refine the corner positions to sub-pixel accuracy

cornerSubPix(grayImage, corner, Size(5, 5), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 20, 0.1));

// draw the corners

drawChessboardCorners(rgbImage, boardSize, corner, isFind);

imshow("chessboard", rgbImage);

corners.push_back(corner);

goodFrameCount++;

printf("Image %d is good...\n", imageIndex);

}

else

{

printf("Image %d is bad, skipping it...\n", imageIndex);

}

if (waitKey(10) == 'q')

{

break;

}

}

if (goodFrameCount == 0)

{

printf("No usable images...\n");

return -3;

}

/*

Image collection is finished; next comes the camera calibration

calibrateCamera()

Inputs: objectPoints physical coordinates of the corners

imagePoints image coordinates of the corners

imageSize size of the image

Outputs:

cameraMatrix camera intrinsic matrix

distCoeffs camera distortion coefficients

rvecs rotation vectors (extrinsics)

tvecs translation vectors (extrinsics)

*/

/* set the initial parameters for calibrateCamera; with flag = 0 this step can be skipped */

guessCameraParam();

printf("guess successful...\n");

/* compute the 3D coordinates of the board corners, one set per accepted image */

calRealPoint(objRealPoint, boardWidth, boardHeight, goodFrameCount, squareSize);

printf("calculate real successful...\n");

/* calibrate the camera */

calibrateCamera(objRealPoint, corners, Size(imageWidth, imageHeight), intrinsic, distortion_coeff, rvecs, tvecs, 0);

printf("calibration successful...\n");

/* print the parameters */

outputCameraParam();

printf("output successful...\n");

/* show the effect of the distortion correction */

Mat cImage;

undistort(rgbImage, cImage, intrinsic, distortion_coeff); // remove the lens distortion

imshow("Corrected Image", cImage);

printf("Corrected Image....\n");

printf("Wait for Key....\n");

waitKey(0);

return 0;

}
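To make the printed numbers less abstract, here is a small standard-library sketch of what the intrinsic parameters and the five distortion coefficients do to a point. It follows the projection model as I understand it from the OpenCV documentation (radial terms k1, k2, k3 and tangential terms p1, p2); the struct and function names are made up for illustration and are not OpenCV API.

```cpp
#include <cassert>

struct Intrinsics { double fx, fy, cx, cy; };      // entries of the 3x3 intrinsic matrix
struct Distortion { double k1, k2, p1, p2, k3; };  // same order as the 5x1 coefficient vector
struct Pixel { double u, v; };

// Project a 3D point given in the camera frame (Z > 0) to pixel coordinates.
Pixel projectPoint(double X, double Y, double Z, const Intrinsics& K, const Distortion& d)
{
    assert(Z > 0.0);
    // 1. Perspective division gives normalised image coordinates.
    const double x = X / Z;
    const double y = Y / Z;
    const double r2 = x * x + y * y;
    // 2. Radial distortion scales the point, tangential distortion shifts it.
    const double radial = 1.0 + d.k1 * r2 + d.k2 * r2 * r2 + d.k3 * r2 * r2 * r2;
    const double xd = x * radial + 2.0 * d.p1 * x * y + d.p2 * (r2 + 2.0 * x * x);
    const double yd = y * radial + d.p1 * (r2 + 2.0 * y * y) + 2.0 * d.p2 * x * y;
    // 3. Focal lengths and principal point turn the result into pixels.
    return { K.fx * xd + K.cx, K.fy * yd + K.cy };
}
```

A point on the optical axis (X = Y = 0) always lands on the principal point (cx, cy), whatever the distortion coefficients are; distortion grows with the distance from the image centre.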

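Notes: (1) Write down the calibrated parameters of the left and right cameras; the stereo calibration needs them.

(2) If cvtColor fails, look up how to construct a new image with the Mat class.

(3) If the output window flashes by and closes, first try the two usual fixes that a quick search turns up. Neither worked for me; the real cause turned out to be frameNumber, which I had set one higher than the number of images I actually had. Expect to debug this kind of thing case by case; it is all part of the process.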
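The frameNumber mismatch from note (3) can be caught before any calibration work starts. A minimal sketch using only the standard library, assuming images are numbered consecutively from 1 as in this post; the function name `countNumberedImages` and its prefix/suffix parameters are hypothetical.

```cpp
#include <fstream>
#include <string>

// Count how many consecutively numbered files prefix1suffix, prefix2suffix, ...
// can be opened, stopping at the first missing number or at maxCount.
int countNumberedImages(const std::string& prefix, const std::string& suffix, int maxCount)
{
    int count = 0;
    for (int i = 1; i <= maxCount; ++i) {
        const std::string name = prefix + std::to_string(i) + suffix;
        std::ifstream file(name.c_str(), std::ios::binary);
        if (!file.is_open()) {
            break; // first gap: later numbers are not counted
        }
        ++count;
    }
    return count;
}
```

For example, calling `countNumberedImages("C:/Users/Administrator/Desktop/11/right", ".jpg", frameNumber)` at the top of `main` and comparing the result with `frameNumber` would report the shortfall directly instead of letting the program exit early.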

3. Stereo calibration. This program is also adapted from one found online, with minor changes.

// stereo camera calibration

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <cstdio>

#include <iostream>

#include <string>

#include <vector>

#include "cv.h"

using namespace std;

using namespace cv; // the borrowed include list was just as long here; these are the standard headers the code needs

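const int imageWidth = 640; // camera resolution

const int imageHeight = 480;

const int boardWidth = 9; // number of inner corners along the board width

const int boardHeight = 6; // number of inner corners along the board height

const int boardCorner = boardWidth * boardHeight; // total number of inner corners

const int frameNumber = 15; // number of image pairs used for the calibration

const int squareSize = 25; // side length of one chessboard square, in mm

const Size boardSize = Size(boardWidth, boardHeight); // inner-corner grid of the board

Size imageSize = Size(imageWidth, imageHeight);

Mat R, T, E, F; // R rotation matrix, T translation vector, E essential matrix, F fundamental matrix

vector<Mat> rvecs; // rotation vectors

vector<Mat> tvecs; // translation vectors

vector<vector<Point2f>> imagePointL; // corner coordinates from every left image

vector<vector<Point2f>> imagePointR; // corner coordinates from every right image

vector<vector<Point3f>> objRealPoint; // physical corner coordinates for every image pair

vector<Point2f> cornerL; // corners of the current left image

vector<Point2f> cornerR; // corners of the current right image

Mat rgbImageL, grayImageL;

Mat rgbImageR, grayImageR;

Mat Rl, Rr, Pl, Pr, Q; // rectification rotations R, projection matrices P, reprojection matrix Q (explained further down)

Mat mapLx, mapLy, mapRx, mapRy; // remapping tables

Rect validROIL, validROIR; // rectification crops the images; validROI is the region that remains valid afterwards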

/*

Intrinsic matrix of the left camera, calibrated beforehand

fx 0 cx

0 fy cy

0 0 1

*/

Mat cameraMatrixL = (Mat_<double>(3, 3) << 462.279595, 0, 312.781587,

0, 460.220741, 208.225803,

0, 0, 1); // this is where you fill in your own single-camera calibration results for both cameras

// distortion coefficients from the single-camera calibration

Mat distCoeffL = (Mat_<double>(5, 1) << -0.054929, 0.224509, 0.000386, 0.001799, -0.302288);

/*

Intrinsic matrix of the right camera, calibrated beforehand

fx 0 cx

0 fy cy

0 0 1

*/

Mat cameraMatrixR = (Mat_<double>(3, 3) << 463.923124, 0, 322.783959,

0, 462.203276, 256.100655,

0, 0, 1);

Mat distCoeffR = (Mat_<double>(5, 1) << -0.049056, 0.229945, 0.001745, -0.001862, -0.321533);

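/* compute the physical coordinates of the corners on the calibration board */

void calRealPoint(vector<vector<Point3f>>& obj, int boardwidth, int boardheight, int imgNumber, int squaresize)

{

vector<Point3f> imgpoint;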

for (int rowIndex = 0; rowIndex < boardheight; rowIndex++)

{

for (int colIndex = 0; colIndex < boardwidth; colIndex++)

{

imgpoint.push_back(Point3f(rowIndex * squaresize, colIndex * squaresize, 0));

}

}

for (int imgIndex = 0; imgIndex < imgNumber; imgIndex++)

{

obj.push_back(imgpoint);

}

}

void outputCameraParam(void)

{

/* save the data and print it */

FileStorage fs("intrinsics.yml", FileStorage::WRITE); // open the file storage for writing

if (fs.isOpened())

{

fs << "cameraMatrixL" << cameraMatrixL << "cameraDistcoeffL" << distCoeffL << "cameraMatrixR" << cameraMatrixR << "cameraDistcoeffR" << distCoeffR;

fs.release();

cout << "cameraMatrixL=:" << cameraMatrixL << endl << "cameraDistcoeffL=:" << distCoeffL << endl << "cameraMatrixR=:" << cameraMatrixR << endl << "cameraDistcoeffR=:" << distCoeffR << endl;

}

else

{

cout << "Error: can not save the intrinsics!!!!!" << endl;

}

fs.open("extrinsics.yml", FileStorage::WRITE);

if (fs.isOpened())

{

fs << "R" << R << "T" << T << "Rl" << Rl << "Rr" << Rr << "Pl" << Pl << "Pr" << Pr << "Q" << Q;

cout << "R=" << R << endl << "T=" << T << endl << "Rl=" << Rl << endl << "Rr=" << Rr << endl << "Pl=" << Pl << endl << "Pr=" << Pr << endl << "Q=" << Q << endl;

fs.release();

}

else

cout << "Error: can not save the extrinsic parameters ";

}

int main(int argc, char* argv[])

{

int goodFrameCount = 0; // number of usable image pairs

for (int imageIndex = 1; imageIndex <= frameNumber; imageIndex++) // read each pair once, so a bad pair cannot stall the loop

{

char filename[100];

/* read the left image */

sprintf_s(filename, "C:/Users/Administrator/Desktop/11/left%d.jpg", imageIndex);

rgbImageL = imread(filename, CV_LOAD_IMAGE_COLOR);

/* read the right image */

sprintf_s(filename, "C:/Users/Administrator/Desktop/11/right%d.jpg", imageIndex);

rgbImageR = imread(filename, CV_LOAD_IMAGE_COLOR);

if (rgbImageL.empty() || rgbImageR.empty())

{

cout << "Could not load image pair " << imageIndex << endl;

return -1;

}

cvtColor(rgbImageL, grayImageL, CV_BGR2GRAY);

cvtColor(rgbImageR, grayImageR, CV_BGR2GRAY);

bool isFindL, isFindR;

isFindL = findChessboardCorners(rgbImageL, boardSize, cornerL);

isFindR = findChessboardCorners(rgbImageR, boardSize, cornerR);

if (isFindL == true && isFindR == true) // all corners were found in both images, so this pair is usable

{

/*

Size(5,5) half the size of the search window

Size(-1,-1) half the size of the dead zone

TermCriteria(CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 20, 0.1) termination criteria of the iteration

*/

cornerSubPix(grayImageL, cornerL, Size(5, 5), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 20, 0.1));

drawChessboardCorners(rgbImageL, boardSize, cornerL, isFindL);

imshow("chessboardL", rgbImageL);

imagePointL.push_back(cornerL);

cornerSubPix(grayImageR, cornerR, Size(5, 5), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 20, 0.1));

drawChessboardCorners(rgbImageR, boardSize, cornerR, isFindR);

imshow("chessboardR", rgbImageR);

imagePointR.push_back(cornerR);

//string filename = "res\image\calibration";

//filename += goodFrameCount + ".jpg";

//cvSaveImage(filename.c_str(), &IplImage(rgbImage)); // save the accepted image

goodFrameCount++;

cout << "Image pair " << imageIndex << " is good" << endl;

}

else

{

cout << "Image pair " << imageIndex << " is bad, skipping it" << endl;

}

if (waitKey(10) == 'q')

{

break;

}

}

if (goodFrameCount == 0)

{

cout << "No usable image pairs" << endl;

return -2;

}

/*

Compute the 3D coordinates of the board corners, one set per accepted pair,

based on the real size of the chessboard squares

*/

calRealPoint(objRealPoint, boardWidth, boardHeight, goodFrameCount, squareSize);

cout << "cal real successful" << endl;

/*

Calibrate the stereo pair

Both cameras have already been calibrated individually,

so flag = CALIB_USE_INTRINSIC_GUESS is used here

*/

double rms = stereoCalibrate(objRealPoint, imagePointL, imagePointR,

cameraMatrixL, distCoeffL,

cameraMatrixR, distCoeffR,

Size(imageWidth, imageHeight),R, T,E, F, CALIB_USE_INTRINSIC_GUESS,

TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 100, 1e-5)); // note: probably because of my OpenCV version, I had to swap the last two arguments (flags and termination criteria) before the error went away

cout << "Stereo Calibration done with RMS error = " << rms << endl;

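/*

Stereo rectification needs the two images to be coplanar and row-aligned, which makes stereo matching more reliable

To make them coplanar, both camera images are projected onto one common image plane, so each image needs its own rotation matrix R to get from its image plane to the common plane

stereoRectify computes exactly these rotations, Rl and Rr: the rectification rotations that row-align the left and right image planes.

Once the left camera is rotated by Rl and the right camera by Rr, the two images are coplanar and row-aligned.

Pl and Pr are the projection matrices of the two cameras; they map a 3D point to a 2D image point: P*[X Y Z 1]' = [x y w]'

Q is the reprojection matrix; it maps a point of the 2D image plane back to a point in 3D space: Q*[x y d 1]' = [X Y Z W]', where d is the disparity between the left and right images

*/

// rectify using the calibration results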

stereoRectify(cameraMatrixL, distCoeffL, cameraMatrixR, distCoeffR, imageSize, R, T, Rl, Rr, Pl, Pr, Q,

CALIB_ZERO_DISPARITY, -1, imageSize, &validROIL, &validROIR);

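/*

From the R and P computed by stereoRectify, build the remapping tables mapx and mapy

remap() then uses mapx and mapy to rectify the images so that they are coplanar and row-aligned

The newCameraMatrix parameter of initUndistortRectifyMap() is the rectified camera matrix. In OpenCV the rectified camera matrix Mrect is returned as part of the projection matrix P.

So we pass the projection matrix P here, and the function reads the rectified camera matrix out of it

*/

// rectification maps for each camera

initUndistortRectifyMap(cameraMatrixL, distCoeffL, Rl, Pl, imageSize, CV_32FC1, mapLx, mapLy); // the left camera takes its own projection matrix Pl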

initUndistortRectifyMap(cameraMatrixR, distCoeffR, Rr, Pr, imageSize, CV_32FC1, mapRx, mapRy);

Mat rectifyImageL, rectifyImageR;

cvtColor(grayImageL, rectifyImageL, CV_GRAY2BGR);

cvtColor(grayImageR, rectifyImageR, CV_GRAY2BGR);

imshow("Rectify Before", rectifyImageL);

cout << "Press Q1 to exit ..." << endl;

/*

After remap, the left and right images are coplanar and row-aligned

*/

Mat rectifyImageL2, rectifyImageR2;

remap(rectifyImageL, rectifyImageL2, mapLx, mapLy, INTER_LINEAR);

remap(rectifyImageR, rectifyImageR2, mapRx, mapRy, INTER_LINEAR);

cout << "Press Q2 to exit ..." << endl;

imshow("rectifyImageL", rectifyImageL2);

imshow("rectifyImageR", rectifyImageR2);

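/* save and print the data */

outputCameraParam();

/*

Show the rectification result

Draw the left and right images side by side on one canvas

Only the last image pair is shown here, not every pair

*/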

Mat canvas;

double sf;

int w, h;

sf = 600. / MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width * sf);

h = cvRound(imageSize.height * sf);

canvas.create(h, w * 2, CV_8UC3);

/* draw the left image onto the canvas */

Mat canvasPart = canvas(Rect(w * 0, 0, w, h)); // left half of the canvas

resize(rectifyImageL2, canvasPart, canvasPart.size(), 0, 0, INTER_AREA); // scale the image to the size of canvasPart

Rect vroiL(cvRound(validROIL.x*sf), cvRound(validROIL.y*sf), // valid region after cropping, scaled to the canvas

cvRound(validROIL.width*sf), cvRound(validROIL.height*sf));

rectangle(canvasPart, vroiL, Scalar(0, 0, 255), 3, 8); // outline it with a rectangle

cout << "Painted ImageL" << endl;

/* draw the right image onto the canvas */

canvasPart = canvas(Rect(w, 0, w, h)); // right half of the canvas

resize(rectifyImageR2, canvasPart, canvasPart.size(), 0, 0, INTER_LINEAR);

Rect vroiR(cvRound(validROIR.x * sf), cvRound(validROIR.y*sf),

cvRound(validROIR.width * sf), cvRound(validROIR.height * sf));

rectangle(canvasPart, vroiR, Scalar(0, 255, 0), 3, 8);

cout << "Painted ImageR" << endl;

/* draw horizontal lines across both images to check the row alignment */

for (int i = 0; i < canvas.rows; i += 16)

line(canvas, Point(0, i), Point(canvas.cols, i), Scalar(0, 255, 0), 1, 8);

imshow("rectified", canvas);

cout << "wait key" << endl;

waitKey(0);

//system("pause");

return 0;

}
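The comment in the listing says that Q maps `[x y d 1]'` to `[X Y Z W]'`. The sketch below works that through with plain arrays. It assumes the form of Q that, as far as I recall from the OpenCV documentation, `stereoRectify` produces with `CALIB_ZERO_DISPARITY` (both principal points equal); `makeQ`, `reproject`, and the numbers in the usage note are illustrative, so compare against the Q your own run prints.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<double, 4>, 4>;
struct Point3 { double x, y, z; };

// Assumed layout of Q for equal principal points:
//   [1 0    0   -cx]
//   [0 1    0   -cy]
//   [0 0    0     f]
//   [0 0 -1/Tx    0]
// f, cx, cy come from the rectified projection matrix; Tx is the x component of T.
Mat4 makeQ(double f, double cx, double cy, double Tx)
{
    assert(Tx != 0.0);
    Mat4 Q{}; // all entries start at zero
    Q[0][0] = 1.0; Q[0][3] = -cx;
    Q[1][1] = 1.0; Q[1][3] = -cy;
    Q[2][3] = f;
    Q[3][2] = -1.0 / Tx;
    return Q;
}

// Multiply Q by [x y d 1]' and divide by W to get the 3D point.
Point3 reproject(const Mat4& Q, double x, double y, double d)
{
    const double in[4] = {x, y, d, 1.0};
    double out[4] = {0.0, 0.0, 0.0, 0.0};
    for (int r = 0; r < 4; ++r) {
        for (int c = 0; c < 4; ++c) {
            out[r] += Q[r][c] * in[c];
        }
    }
    assert(out[3] != 0.0); // zero disparity would put the point at infinity
    return { out[0] / out[3], out[1] / out[3], out[2] / out[3] };
}
```

With this form the depth reduces to Z = -f * Tx / d. Tx is typically negative when the left camera is the first camera, so for a made-up rig with f = 460 px and a 60 mm baseline (Tx = -60), a disparity of 46 px corresponds to Z = 600 mm.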

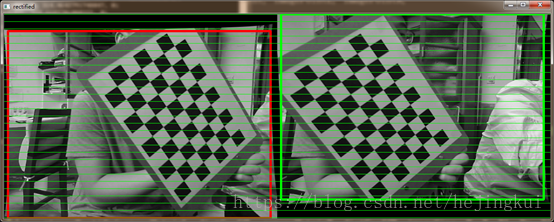

附上最后效果圖:
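The horizontal lines in the result image are a visual check that matching features sit on the same row in both rectified images. The same check can be put into a number. This is a sketch under assumptions: the matched points would be the chessboard corners of one pair after mapping them into the rectified images (for instance with OpenCV's `undistortPoints`, passing the rectification rotation and projection matrix), and `Point2` and `meanRowError` are names invented here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 { double x, y; };

// Mean absolute difference of the row (y) coordinate between matched points
// in the rectified left and right images. Values well under one pixel suggest
// the rows line up; the x difference is the disparity and is ignored here.
double meanRowError(const std::vector<Point2>& left, const std::vector<Point2>& right)
{
    assert(left.size() == right.size() && !left.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < left.size(); ++i) {
        sum += std::fabs(left[i].y - right[i].y);
    }
    return sum / static_cast<double>(left.size());
}
```

A large value usually points back at the inputs: mismatched left/right pairs, a board that moved between the two shots, or poor single-camera parameters.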

Reviewing editor: 李倩


Original title: opencv 雙目標定操作完整版 (OpenCV stereo calibration, the complete walkthrough)

Source: WeChat official account 機器視覺沙龍. Please credit the source when reposting.
