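You may have noticed that in the case of regression, the implementation from scratch and the concise implementation using framework functionality were quite similar. The same is true for classification. Since many models in this book deal with classification, it is worth adding functionality that supports this setting specifically. This section provides a base class for classification models to simplify future code.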

# PyTorch
import torch
from d2l import torch as d2l

# MXNet
from mxnet import autograd, gluon, np, npx
from d2l import mxnet as d2l

npx.set_np()

# JAX
from functools import partial

import jax
import optax
from jax import numpy as jnp
from d2l import jax as d2l


# TensorFlow
import tensorflow as tf
from d2l import tensorflow as d2l

4.3.1. The Classifier Class

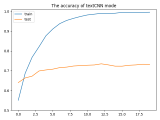

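We define the Classifier class below. In validation_step, we report both the loss value and the classification accuracy on a validation batch. We draw one plot update every num_val_batches batches. Each plotted point is therefore the loss and accuracy averaged over the whole validation set. These averages are not exactly correct if the last batch contains fewer examples, but we ignore this minor difference to keep the code simple.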

# PyTorch, MXNet, and TensorFlow share this definition
class Classifier(d2l.Module):  #@save
    """The base class of classification models."""
    def validation_step(self, batch):
        Y_hat = self(*batch[:-1])
        self.plot('loss', self.loss(Y_hat, batch[-1]), train=False)
        self.plot('acc', self.accuracy(Y_hat, batch[-1]), train=False)
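The plotted averages can be slightly off, and a short example shows why. Below is a minimal sketch in plain Python. The helper `batch_means` and the loss values are hypothetical and not part of d2l. The sketch averages per-batch mean losses the way the plotted curve does. It then compares that result with the exact per-example average when the last batch is smaller.

```python
def batch_means(losses, batch_size):
    """Split per-example losses into minibatches; return each batch's mean."""
    means = []
    for i in range(0, len(losses), batch_size):
        batch = losses[i:i + batch_size]
        means.append(sum(batch) / len(batch))
    return means


# Hypothetical per-example validation losses: 5 examples, batch size 2,
# so the last minibatch holds a single example.
losses = [1.0, 1.0, 1.0, 1.0, 4.0]
means = batch_means(losses, batch_size=2)   # [1.0, 1.0, 4.0]

quick = sum(means) / len(means)             # average of batch averages
exact = sum(losses) / len(losses)           # true per-example average
print(quick, exact)                         # 2.0 1.6
```

The short last batch gets the same weight as a full one, so its single example has an outsized influence. With equal-sized batches, the two numbers coincide.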



We also redefine the training_step method for JAX since all models that will subclass Classifier later will have a loss that returns auxiliary data. This auxiliary data can be used for models with batch normalization (to be explained in Section 8.5), while in all other cases we will make the loss also return a placeholder (empty dictionary) to represent the auxiliary data.

# JAX
class Classifier(d2l.Module):  #@save
    """The base class of classification models."""
    def training_step(self, params, batch, state):
        # Here value is a tuple since models with BatchNorm layers require
        # the loss to return auxiliary data
        value, grads = jax.value_and_grad(
            self.loss, has_aux=True)(params, batch[:-1], batch[-1], state)
        l, _ = value
        self.plot("loss", l, train=True)
        return value, grads

    def validation_step(self, params, batch, state):
        # Discard the second returned value. It is used for training models
        # with BatchNorm layers since loss also returns auxiliary data
        l, _ = self.loss(params, batch[:-1], batch[-1], state)
        self.plot('loss', l, train=False)
        self.plot('acc', self.accuracy(params, batch[:-1], batch[-1], state),
                  train=False)


By default, we use a stochastic gradient descent optimizer operating on minibatches, just as we did in the context of linear regression.

# PyTorch
@d2l.add_to_class(d2l.Module)  #@save
def configure_optimizers(self):
    return torch.optim.SGD(self.parameters(), lr=self.lr)

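# MXNet
@d2l.add_to_class(d2l.Module)  #@save
def configure_optimizers(self):
    params = self.parameters()
    # From-scratch models expose a plain list of arrays; use d2l's SGD for them
    if isinstance(params, list):
        return d2l.SGD(params, self.lr)
    # Otherwise, use Gluon's built-in SGD trainer
    return gluon.Trainer(params, 'sgd', {'learning_rate': self.lr})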

# JAX
@d2l.add_to_class(d2l.Module)  #@save
def configure_optimizers(self):
    return optax.sgd(self.lr)

# TensorFlow
@d2l.add_to_class(d2l.Module)  #@save
def configure_optimizers(self):
    return tf.keras.optimizers.SGD(self.lr)
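Configured as above, with no momentum or weight decay, these optimizers share one core rule. Each parameter moves against the gradient of the loss averaged over the current minibatch, scaled by the learning rate lr. Here is a minimal plain-Python sketch of that rule. It assumes a hypothetical one-feature linear model with squared loss. The helpers `minibatch_grads` and `sgd_step` are illustrative, not d2l or framework APIs.

```python
def minibatch_grads(w, b, batch):
    """Gradients of the mean of 0.5 * (w*x + b - y)**2 over a minibatch."""
    n = len(batch)
    grad_w = sum((w * x + b - y) * x for x, y in batch) / n
    grad_b = sum((w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def sgd_step(params, grads, lr):
    """Plain SGD update: p <- p - lr * g for every parameter."""
    return [p - lr * g for p, g in zip(params, grads)]


# Hypothetical noise-free data from y = 2x + 1, split into two minibatches.
batches = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (3.0, 7.0)]]
w, b = 0.0, 0.0
lr = 0.2
for epoch in range(1000):
    for batch in batches:
        w, b = sgd_step([w, b], minibatch_grads(w, b, batch), lr)
print(round(w, 4), round(b, 4))  # 2.0 1.0
```

Each update uses only one minibatch, not the full dataset. Because this toy data is noise-free, every minibatch gradient vanishes at the true parameters, so the iterates settle there.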

4.3.2. Accuracy

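Given the predicted probability distribution y_hat, we typically choose the class with the highest predicted probability whenever we must output a hard prediction. Indeed, many applications require that we make a choice. For instance, Gmail must categorize an email into "Primary", "Social", "Updates", "Forums", or "Spam". It might estimate probabilities internally, but at the end of the day it has to choose one among the classes.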
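Choosing a hard prediction amounts to an argmax over the predicted probabilities. Here is a tiny plain-Python sketch using the five categories above. The probabilities and the helper name `hard_prediction` are made up for illustration.

```python
def hard_prediction(probs, classes):
    """Return the class whose predicted probability is highest (argmax)."""
    best = max(range(len(probs)), key=lambda j: probs[j])
    return classes[best]


classes = ["Primary", "Social", "Updates", "Forums", "Spam"]
# Hypothetical classifier output for one email; the entries sum to 1.
probs = [0.15, 0.05, 0.55, 0.05, 0.20]
print(hard_prediction(probs, classes))  # Updates
```

On ties, Python's `max` keeps the first maximal index. This matches the usual argmax convention.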

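A prediction is correct when it agrees with the label class y. The classification accuracy is the fraction of all predictions that are correct. Optimizing accuracy directly can be difficult because it is not differentiable. Even so, it is often the performance measure we care about most, and it is often the relevant quantity in benchmarks. As such, we will nearly always report it when training classifiers.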
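The non-differentiability is easy to see in a small example. Accuracy only changes when some prediction flips, so small changes to the scores leave it unchanged, and its gradient is zero almost everywhere. Here is a plain-Python sketch with made-up scores for a two-class problem. The helper name `accuracy_from_scores` is illustrative.

```python
def accuracy_from_scores(scores, labels):
    """Fraction of rows whose argmax matches the label."""
    preds = [max(range(len(row)), key=lambda j: row[j]) for row in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


labels = [1, 1, 1]
scores = [[0.2, 0.8], [0.6, 0.4], [0.3, 0.7]]  # second row is misclassified
base = accuracy_from_scores(scores, labels)

# Raise the correct-class score of the misclassified row a little, then a lot.
small = [[0.2, 0.8], [0.6, 0.41], [0.3, 0.7]]
large = [[0.2, 0.8], [0.6, 0.65], [0.3, 0.7]]
print(base, accuracy_from_scores(small, labels), accuracy_from_scores(large, labels))
# 0.6666666666666666 0.6666666666666666 1.0
```

The small nudge gives a finite-difference slope of zero. Crossing the decision boundary causes a jump. This is why we train on a differentiable loss and only report accuracy.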

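Accuracy is computed as follows. First, if y_hat is a matrix, we assume that the second dimension stores prediction scores for each class. More generally, the code reshapes y_hat so that each row holds the class scores of one example. We use argmax to obtain the predicted class as the index of the largest entry in each row. Then we compare the predicted class with the ground truth y elementwise. Since the equality operator == is sensitive to data types, we convert the predicted class indices to the data type of y. The result is a tensor containing entries of 0 (false) and 1 (true). Taking its mean yields the accuracy, while summing it would yield the number of correct predictions. With averaged=False, the method returns the 0/1 tensor itself.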

# PyTorch
@d2l.add_to_class(Classifier)  #@save
def accuracy(self, Y_hat, Y, averaged=True):
    """Compute the number of correct predictions."""
    Y_hat = Y_hat.reshape((-1, Y_hat.shape[-1]))
    preds = Y_hat.argmax(axis=1).type(Y.dtype)
    compare = (preds == Y.reshape(-1)).type(torch.float32)
    return compare.mean() if averaged else compare

# MXNet
@d2l.add_to_class(Classifier)  #@save
def accuracy(self, Y_hat, Y, averaged=True):
    """Compute the number of correct predictions."""
    Y_hat = Y_hat.reshape((-1, Y_hat.shape[-1]))
    preds = Y_hat.argmax(axis=1).astype(Y.dtype)
    compare = (preds == Y.reshape(-1)).astype(np.float32)
    return compare.mean() if averaged else compare

@d2l.add_to_class(d2l.Module)  #@save
def get_scratch_params(self):
    # Collect every ndarray attribute, recursing into sub-modules
    params = []
    for attr in dir(self):
        a = getattr(self, attr)
        if isinstance(a, np.ndarray):
            params.append(a)
        if isinstance(a, d2l.Module):
            params.extend(a.get_scratch_params())
    return params

@d2l.add_to_class(d2l.Module)  #@save
def parameters(self):
    # Use Gluon's registered parameters if there are any;
    # otherwise fall back to the arrays of a from-scratch model
    params = self.collect_params()
    return params if isinstance(params, gluon.parameter.ParameterDict) and len(
        params.keys()) else self.get_scratch_params()
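The scratch-parameter fallback above finds parameters by reflection. It walks dir(self), keeps every array attribute, and recurses into sub-modules. The sketch below reproduces that traversal in plain Python so it runs without MXNet. The stand-in classes `ToyArray`, `ToyModule`, `ToyLinear`, and `ToyMLP` are hypothetical, not MXNet or d2l classes.

```python
class ToyArray:
    """Hypothetical stand-in for an mxnet np.ndarray parameter."""
    def __init__(self, name):
        self.name = name


class ToyModule:
    """Hypothetical stand-in for d2l.Module with the same collection loop."""
    def get_scratch_params(self):
        params = []
        for attr in dir(self):          # attribute names, sorted
            a = getattr(self, attr)
            if isinstance(a, ToyArray):
                params.append(a)        # a parameter of this module
            if isinstance(a, ToyModule):
                params.extend(a.get_scratch_params())  # recurse into child
        return params


class ToyLinear(ToyModule):
    def __init__(self):
        self.W = ToyArray('W')
        self.b = ToyArray('b')


class ToyMLP(ToyModule):
    def __init__(self):
        self.hidden = ToyLinear()
        self.out = ToyLinear()
        self.scale = ToyArray('scale')


print([p.name for p in ToyMLP().get_scratch_params()])
# ['W', 'b', 'W', 'b', 'scale']
```

Because dir() returns names in sorted order, the parameters come out in alphabetical attribute order rather than definition order.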

# JAX
@d2l.add_to_class(Classifier)  #@save
@partial(jax.jit, static_argnums=(0, 5))
def accuracy(self, params, X, Y, state, averaged=True):
    """Compute the number of correct predictions."""
    Y_hat = state.apply_fn({'params': params,
                            'batch_stats': state.batch_stats},  # BatchNorm Only
                           *X)
    Y_hat = Y_hat.reshape((-1, Y_hat.shape[-1]))
    preds = Y_hat.argmax(axis=1).astype(Y.dtype)
    compare = (preds == Y.reshape(-1)).astype(jnp.float32)
    return compare.mean() if averaged else compare

# TensorFlow
@d2l.add_to_class(Classifier)  #@save
def accuracy(self, Y_hat, Y, averaged=True):
    """Compute the number of correct predictions."""
    Y_hat = tf.reshape(Y_hat, (-1, Y_hat.shape[-1]))
    preds = tf.cast(tf.argmax(Y_hat, axis=1), Y.dtype)
    compare = tf.cast(preds == tf.reshape(Y, -1), tf.float32)
    return tf.reduce_mean(compare) if averaged else compare
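To make these steps concrete without any framework, here is a plain-Python sketch that mirrors the accuracy method on nested lists. The name `accuracy_sketch` is hypothetical. The sketch works in four steps:

* It flattens any leading dimensions.
* It takes the argmax of each row.
* It compares each prediction with its label.
* It either averages the results or returns the per-example 0/1 values.

```python
def accuracy_sketch(Y_hat, Y, averaged=True):
    """Plain-Python analogue of Classifier.accuracy for nested lists."""
    # Flatten leading dimensions so each row holds one example's class scores,
    # like Y_hat.reshape((-1, Y_hat.shape[-1])).
    rows = Y_hat
    while isinstance(rows[0][0], list):
        rows = [row for block in rows for row in block]
    labels = Y
    while isinstance(labels[0], list):
        labels = [y for block in labels for y in block]
    # Predicted class = index of the largest score in each row (argmax).
    preds = [max(range(len(row)), key=lambda j: row[j]) for row in rows]
    # 1.0 where the prediction matches the label, 0.0 otherwise.
    compare = [1.0 if p == y else 0.0 for p, y in zip(preds, labels)]
    return sum(compare) / len(compare) if averaged else compare


Y_hat = [[0.1, 0.3, 0.6], [0.3, 0.2, 0.5], [0.7, 0.2, 0.1], [0.2, 0.5, 0.3]]
Y = [2, 0, 0, 1]
print(accuracy_sketch(Y_hat, Y))                        # 0.75
print(accuracy_sketch(Y_hat, Y, averaged=False))        # [1.0, 0.0, 1.0, 1.0]
print(sum(accuracy_sketch(Y_hat, Y, averaged=False)))   # 3.0
```

Python compares integer indices with integer labels directly, so the sketch needs no counterpart to the dtype cast in the framework versions.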

4.3.3. Summary

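Classification is a sufficiently common problem that it warrants its own convenience functions. Of central importance in classification is the accuracy of the classifier. We often care primarily about accuracy, yet for statistical and computational reasons we train classifiers to optimize a variety of other objectives. However, regardless of which loss function was minimized during training, it is useful to have a convenience method for assessing the accuracy of our classifier empirically.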

4.3.4. Exercises

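1. Denote by $L_\textrm{v}$ the validation loss, and let $L_\textrm{v}^\textrm{q}$ be its quick and dirty estimate computed by the loss-function averaging in this section. Lastly, denote by $l_\textrm{v}^\textrm{b}$ the loss on the last minibatch. Express $L_\textrm{v}$ in terms of $L_\textrm{v}^\textrm{q}$, $l_\textrm{v}^\textrm{b}$, and the sample and minibatch sizes.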

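2. Show that the quick and dirty estimate $L_\textrm{v}^\textrm{q}$ is unbiased. That is, show that $E[L_\textrm{v}] = E[L_\textrm{v}^\textrm{q}]$. Why would you still want to use $L_\textrm{v}$ instead?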

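3. Consider a multiclass classification loss, where $l(y, y')$ denotes the penalty of estimating $y'$ when we see $y$, and let $p(y \mid x)$ be a given probability. Formulate the rule for an optimal selection of $y'$. Hint: express the expected loss using $l$ and $p(y \mid x)$.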

-

pytorch

+關注

關注

2文章

808瀏覽量

13249

發布評論請先 登錄

相關推薦

Pytorch模型訓練實用PDF教程【中文】

pyhanlp文本分類與情感分析

將pytorch模型轉化為onxx模型的步驟有哪些

通過Cortex來非常方便的部署PyTorch模型

將Pytorch模型轉換為DeepViewRT模型時出錯怎么解決?

pytorch模型轉換需要注意的事項有哪些?

textCNN論文與原理——短文本分類

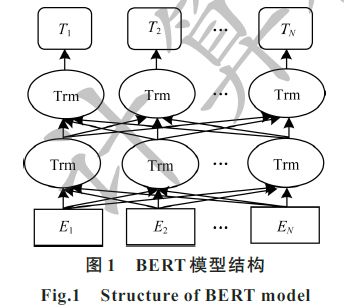

結合BERT模型的中文文本分類算法

融合文本分類和摘要的多任務學習摘要模型

PyTorch教程-4.3. 基本分類模型

PyTorch教程-4.3. 基本分類模型

評論