Data scientist Prakash Jay explains the idea behind transfer learning, shows how to implement it with Keras, and walks through the common transfer learning scenarios.

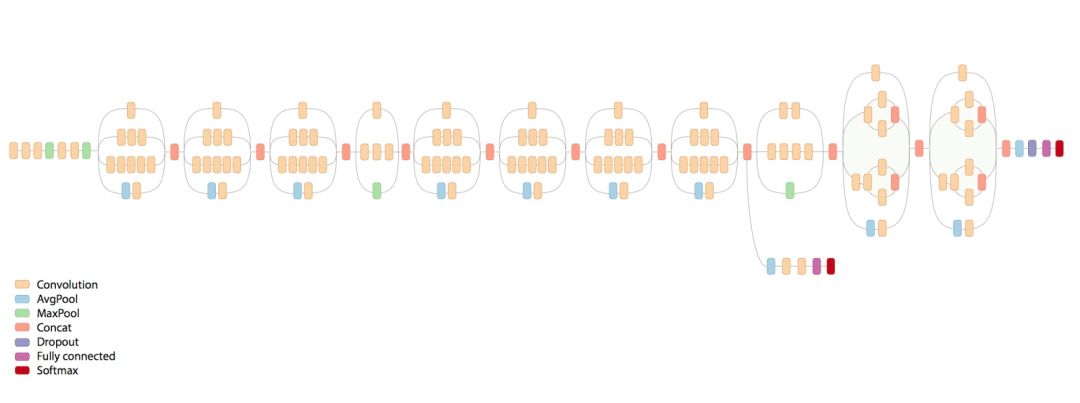

Inception-V3

What is transfer learning?

In machine learning, transfer learning is concerned with storing the knowledge gained while solving one problem and applying it to a different but related problem.

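Why transfer learning?

In practice, very few people train a convolutional network from scratch, because it is hard to obtain a sufficiently large dataset. Using a pretrained network helps with most of the problems at hand.

Deep networks are expensive to train. Even with hundreds of machines equipped with expensive GPUs, the most complex models take many weeks to train.

Choosing the topology, flavor, training method, and hyperparameters for deep learning is a black art with little theory to guide you.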

My experience

Don't try to be a hero.

- Andrej Karpathy

Most of the computer vision problems I work on do not come with very large datasets (5,000 to 40,000 images). Even with extreme data augmentation strategies, it is hard to reach decent accuracy. And training a network with millions of parameters on a small dataset usually leads to overfitting. So transfer learning comes to my rescue.

Why does transfer learning work?

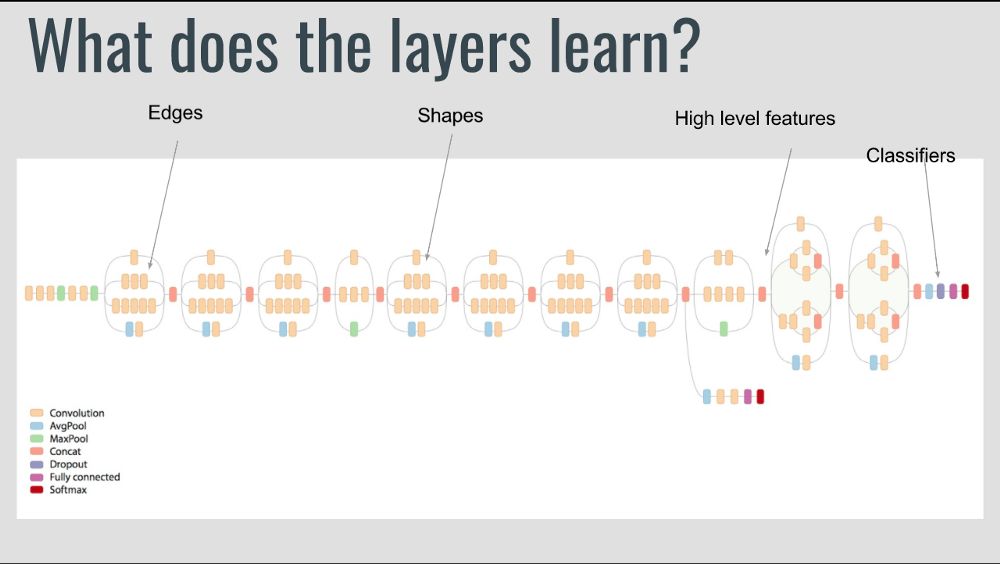

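Consider what a deep network learns: the early layers try to detect edges, the middle layers try to detect shapes, and the later layers try to detect high-level, task-specific features. That is why a network trained on one task is usually useful for other computer vision problems.

Next, let's look at how to implement transfer learning with Keras, and at the common transfer learning scenarios.

A simple implementation in Keras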

from keras import applications
from keras import optimizers  # needed for optimizers.SGD when compiling the model
from keras.preprocessing.image import ImageDataGenerator
from keras.models import Sequential, Model
from keras.layers import Dropout, Flatten, Dense, GlobalAveragePooling2D
from keras import backend as k
from keras.callbacks import ModelCheckpoint, LearningRateScheduler, TensorBoard, EarlyStopping

img_width, img_height = 256, 256
train_data_dir = "data/train"
validation_data_dir = "data/val"
nb_train_samples = 4125
nb_validation_samples = 466
batch_size = 16
epochs = 50

# Load VGG19 pretrained on ImageNet, without its fully connected top layers
model = applications.VGG19(weights="imagenet", include_top=False, input_shape=(img_width, img_height, 3))

"""

Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 256, 256, 3)       0
block1_conv1 (Conv2D)        (None, 256, 256, 64)      1792
block1_conv2 (Conv2D)        (None, 256, 256, 64)      36928
block1_pool (MaxPooling2D)   (None, 128, 128, 64)      0
block2_conv1 (Conv2D)        (None, 128, 128, 128)     73856
block2_conv2 (Conv2D)        (None, 128, 128, 128)     147584
block2_pool (MaxPooling2D)   (None, 64, 64, 128)       0
block3_conv1 (Conv2D)        (None, 64, 64, 256)       295168
block3_conv2 (Conv2D)        (None, 64, 64, 256)       590080
block3_conv3 (Conv2D)        (None, 64, 64, 256)       590080
block3_conv4 (Conv2D)        (None, 64, 64, 256)       590080
block3_pool (MaxPooling2D)   (None, 32, 32, 256)       0
block4_conv1 (Conv2D)        (None, 32, 32, 512)       1180160
block4_conv2 (Conv2D)        (None, 32, 32, 512)       2359808
block4_conv3 (Conv2D)        (None, 32, 32, 512)       2359808
block4_conv4 (Conv2D)        (None, 32, 32, 512)       2359808
block4_pool (MaxPooling2D)   (None, 16, 16, 512)       0
block5_conv1 (Conv2D)        (None, 16, 16, 512)       2359808
block5_conv2 (Conv2D)        (None, 16, 16, 512)       2359808
block5_conv3 (Conv2D)        (None, 16, 16, 512)       2359808
block5_conv4 (Conv2D)        (None, 16, 16, 512)       2359808
block5_pool (MaxPooling2D)   (None, 8, 8, 512)         0
=================================================================
Total params: 20,024,384
Trainable params: 20,024,384
Non-trainable params: 0

"""

# Freeze the layers you do not want to train. Here I freeze the first 5 layers.
for layer in model.layers[:5]:
    layer.trainable = False

# Add custom layers
x = model.output
x = Flatten()(x)
x = Dense(1024, activation="relu")(x)
x = Dropout(0.5)(x)
x = Dense(1024, activation="relu")(x)
predictions = Dense(16, activation="softmax")(x)

# Create the final model (Keras 2 uses the keyword names inputs/outputs)
model_final = Model(inputs=model.input, outputs=predictions)

# Compile the final model
model_final.compile(loss="categorical_crossentropy", optimizer=optimizers.SGD(lr=0.0001, momentum=0.9), metrics=["accuracy"])

# Data augmentation for the training and validation generators
train_datagen = ImageDataGenerator(
    rescale=1./255,
    horizontal_flip=True,
    fill_mode="nearest",
    zoom_range=0.3,
    width_shift_range=0.3,
    height_shift_range=0.3,
    rotation_range=30)

test_datagen = ImageDataGenerator(
    rescale=1./255,
    horizontal_flip=True,
    fill_mode="nearest",
    zoom_range=0.3,
    width_shift_range=0.3,
    height_shift_range=0.3,
    rotation_range=30)

train_generator = train_datagen.flow_from_directory(
    train_data_dir,
    target_size=(img_height, img_width),
    batch_size=batch_size,
    class_mode="categorical")

validation_generator = test_datagen.flow_from_directory(
    validation_data_dir,
    target_size=(img_height, img_width),
    batch_size=batch_size,  # set explicitly; the Keras default would be 32
    class_mode="categorical")

# Save the best model (by validation accuracy) and stop early when it stops improving
checkpoint = ModelCheckpoint("vgg16_1.h5", monitor='val_acc', verbose=1, save_best_only=True, save_weights_only=False, mode='auto', period=1)
early = EarlyStopping(monitor='val_acc', min_delta=0, patience=10, verbose=1, mode='auto')

# Train the model
model_final.fit_generator(
    train_generator,
    steps_per_epoch=nb_train_samples // batch_size,  # Keras 2 counts batches per epoch, not samples
    epochs=epochs,
    validation_data=validation_generator,
    validation_steps=nb_validation_samples // batch_size,
    callbacks=[checkpoint, early])
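Keras 2 measures the length of an epoch in batches (the `steps_per_epoch` and `validation_steps` arguments of `fit_generator`) rather than in samples. The sketch below converts the sample counts and batch size used in this article into step counts. The helper name `steps_for` is hypothetical, and whether to round down (skip the last partial batch) or up (include it) is a judgment call that the original does not specify.

```python
import math


def steps_for(num_samples, batch_size, keep_partial_batch=False):
    """Number of generator batches needed to go through num_samples once."""
    if keep_partial_batch:
        # Round up so the final, smaller batch is included
        return math.ceil(num_samples / batch_size)
    # Round down so every counted batch is a full one
    return num_samples // batch_size


nb_train_samples = 4125
nb_validation_samples = 466
batch_size = 16

train_steps = steps_for(nb_train_samples, batch_size)
val_steps = steps_for(nb_validation_samples, batch_size)
print(train_steps, val_steps)
```

This assumes the validation generator uses the same batch size as the training generator.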

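Common transfer learning scenarios

Remember that convolutional features in the earlier layers are more generic, while those in the later layers are more specific to the original dataset. There are four main scenarios: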

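1. The new dataset is small and similar to the original dataset

If we try to train the whole network, we are likely to overfit. Because the new data is similar to the original data, we expect the higher-level features of the convolutional network to be relevant to the new dataset as well. The recommendation is therefore to freeze all convolutional layers and train only the classifier (for example, a linear classifier):

for layer in model.layers:
    layer.trainable = False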
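A minimal sketch of this scenario, assuming Keras is installed: freeze every layer of the convolutional base and attach a single softmax layer as the linear classifier. To keep the example light it uses `weights=None` and a 64 x 64 input; in practice you would load the ImageNet weights with `weights="imagenet"`. The global average pooling layer and the 16-class output are illustrative choices, not something the original text prescribes.

```python
from keras.applications import VGG19
from keras.layers import Dense, GlobalAveragePooling2D
from keras.models import Model

# weights=None avoids downloading the ImageNet weights for this sketch
base = VGG19(weights=None, include_top=False, input_shape=(64, 64, 3))

# Freeze the whole convolutional base
for layer in base.layers:
    layer.trainable = False

# Linear (softmax) classifier on top of the pooled convolutional features
x = GlobalAveragePooling2D()(base.output)
predictions = Dense(16, activation="softmax")(x)
classifier = Model(inputs=base.input, outputs=predictions)

# Only the Dense layer's kernel and bias should remain trainable
print(len(classifier.trainable_weights))
```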

2. The new dataset is large and similar to the original dataset

Because we have more data, we can be more confident that fine-tuning the whole network will not overfit.

for layer in model.layers:
    layer.trainable = True

True is already the default; the code above sets it explicitly only to make the point clear.

Since the first few layers detect edges, you can also choose to freeze them. For example, the following code freezes the first 5 layers of VGG19:

for layer in model.layers[:5]:
    layer.trainable = False
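It helps to check which layers a slice such as `model.layers[:5]` actually covers. The sketch below uses the layer names from the VGG19 model summary (no Keras needed): the first five entries include the input layer and a pooling layer, neither of which has weights, so only three convolutional layers end up frozen. The variable names are illustrative.

```python
# First seven layer names of VGG19 with include_top=False, taken from the model summary
vgg19_layer_names = [
    "input_1",
    "block1_conv1", "block1_conv2", "block1_pool",
    "block2_conv1", "block2_conv2", "block2_pool",
]

# Layers covered by model.layers[:5]
frozen = vgg19_layer_names[:5]

# Of those, only the convolutional layers carry weights
frozen_conv = [name for name in frozen if "conv" in name]

print(frozen)
print(frozen_conv)

# To freeze the first two blocks completely, the slice would have to end at 7
first_two_blocks = vgg19_layer_names[:7]
```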

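3. The new dataset is small but very different from the original dataset

Because the dataset is small, we probably want to extract features from the earlier layers and train a classifier on top of them. (This assumes some familiarity with h5py.)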

from keras import applications
from keras.preprocessing.image import ImageDataGenerator
from keras import optimizers
from keras.models import Sequential, Model
from keras.layers import Dropout, Flatten, Dense, GlobalAveragePooling2D
from keras.layers import Input, Conv2D, MaxPooling2D  # used to rebuild the first two VGG blocks
from keras import backend as k
from keras.callbacks import ModelCheckpoint, LearningRateScheduler, TensorBoard, EarlyStopping

img_width, img_height = 256, 256

### Build the network
img_input = Input(shape=(256, 256, 3))

# Block 1
x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv1')(img_input)
x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv2')(x)
x = MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)

# Block 2
x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv1')(x)
x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv2')(x)
x = MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)

model = Model(inputs=img_input, outputs=x)
model.summary()

"""

Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 256, 256, 3)       0
block1_conv1 (Conv2D)        (None, 256, 256, 64)      1792
block1_conv2 (Conv2D)        (None, 256, 256, 64)      36928
block1_pool (MaxPooling2D)   (None, 128, 128, 64)      0
block2_conv1 (Conv2D)        (None, 128, 128, 128)     73856
block2_conv2 (Conv2D)        (None, 128, 128, 128)     147584
block2_pool (MaxPooling2D)   (None, 64, 64, 128)       0
=================================================================
Total params: 260,160
Trainable params: 260,160
Non-trainable params: 0

"""

layer_dict = dict([(layer.name, layer) for layer in model.layers])
[layer.name for layer in model.layers]

"""
['input_1',
 'block1_conv1',
 'block1_conv2',
 'block1_pool',
 'block2_conv1',
 'block2_conv2',
 'block2_pool']
"""

import h5py

weights_path = 'vgg19_weights.h5'  # (https://github.com/fchollet/deep-learning-models/releases/download/v0.1/vgg19_weights_tf_dim_ordering_tf_kernels.h5)
f = h5py.File(weights_path, "r")  # open the weights file read-only

list(f["model_weights"].keys())

"""
['block1_conv1', 'block1_conv2', 'block1_pool',
 'block2_conv1', 'block2_conv2', 'block2_pool',
 'block3_conv1', 'block3_conv2', 'block3_conv3', 'block3_conv4', 'block3_pool',
 'block4_conv1', 'block4_conv2', 'block4_conv3', 'block4_conv4', 'block4_pool',
 'block5_conv1', 'block5_conv2', 'block5_conv3', 'block5_conv4', 'block5_pool',
 'dense_1', 'dense_2', 'dense_3', 'dropout_1',
 'global_average_pooling2d_1', 'input_1']
"""

# List the names of all layers in the model
layer_names = [layer.name for layer in model.layers]

"""
# Look up the weight names stored for each layer in the `.h5` file
>>> f["model_weights"]["block1_conv1"].attrs["weight_names"]
array([b'block1_conv1/kernel:0', b'block1_conv1/bias:0'],
      dtype='|S21')

# This array is assigned to weight_names; each entry can then be read like this
>>> f["model_weights"]["block1_conv1"]["block1_conv1/kernel:0"]

# The list comprehension (weights) stores the layer's kernel and bias
>>> layer_names.index("block1_conv1")
1
>>> model.layers[1].set_weights(weights)
# This sets the weights for that particular layer.

With a for loop we can set the weights for the whole network.
"""

for i in layer_dict.keys():
    weight_names = f["model_weights"][i].attrs["weight_names"]
    weights = [f["model_weights"][i][j] for j in weight_names]
    index = layer_names.index(i)
    model.layers[index].set_weights(weights)

import cv2
import numpy as np
import pandas as pd
from tqdm import tqdm
import itertools
import glob

# files_location is not defined in the original; it is expected to be a list of image file paths
features = []
for i in tqdm(files_location):
    im = cv2.imread(i)
    im = cv2.resize(cv2.cvtColor(im, cv2.COLOR_BGR2RGB), (256, 256)).astype(np.float32) / 255.0
    im = np.expand_dims(im, axis=0)
    outcome = model.predict(im)  # the truncated model built above, so this yields block2_pool features
    features.append(outcome)

## Collect these features, build a dataframe, and train a classifier on top of it

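The code above extracts the block2_pool features. In general, since this layer outputs 64 x 64 x 128 features, training a classifier directly on top of it is unlikely to help much. Instead, we can add a few fully connected layers and train a neural network on top of them:

Add a few fully connected layers and an output layer.

Set the weights for the earlier layers and freeze them.

Train the network.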
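To see why a classifier placed directly on block2_pool is unwieldy, compare the sizes involved. The sketch below uses the standard parameter-count formulas for convolutional and fully connected layers. The 1024-unit layer mirrors the custom layers used earlier in the article; the global-average-pooling alternative is an assumption added for comparison, not something the original prescribes.

```python
def conv2d_params(kernel_size, in_channels, out_channels):
    """Weights plus biases of a Conv2D layer with square kernels."""
    return (kernel_size * kernel_size * in_channels + 1) * out_channels


def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return (n_inputs + 1) * n_units


# The two VGG blocks that make up the truncated feature extractor
extractor_params = (
    conv2d_params(3, 3, 64) + conv2d_params(3, 64, 64)
    + conv2d_params(3, 64, 128) + conv2d_params(3, 128, 128)
)

# block2_pool output for a 256 x 256 input: 64 x 64 x 128 values per image
flat_features = 64 * 64 * 128

# A 1024-unit layer placed directly on the flattened features
dense_on_flat = dense_params(flat_features, 1024)

# Assumption: global average pooling first leaves one value per channel (128)
dense_on_pooled = dense_params(128, 1024)

print(extractor_params, flat_features, dense_on_flat, dense_on_pooled)
```

The extractor's count matches the 260,160 total in the model summary, while a single dense layer on the flattened features would hold roughly two thousand times as many parameters.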

4. The new dataset is large and very different from the original dataset

Because you have a large dataset, you can design your own network or use an existing one.

You can train the network starting from randomly initialized weights or from pretrained weights. The latter is usually preferred.

You can use a different network, or make some changes to an existing one.
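The four scenarios can be condensed into a small lookup. This is only a summary sketch of the guidance above: the function name and the returned strings are hypothetical, and reducing dataset size and similarity to two booleans is a simplification.

```python
def transfer_strategy(dataset_is_large, similar_to_original):
    """Return the rule of thumb for one of the four transfer learning scenarios."""
    if not dataset_is_large and similar_to_original:
        return "freeze all convolutional layers; train only the classifier"
    if dataset_is_large and similar_to_original:
        return "fine-tune the whole network; optionally freeze the first few layers"
    if not dataset_is_large and not similar_to_original:
        return "extract features from earlier layers; train a classifier on top"
    return "train the full network, usually initialized from pretrained weights"


# Print the recommendation for every combination
for large in (False, True):
    for similar in (True, False):
        print(large, similar, "->", transfer_strategy(large, similar))
```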


Original title: Transfer Learning with Keras

Source: WeChat ID jqr_AI, WeChat official account 论智. Please credit the source when reposting.
