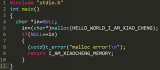

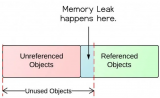

? ? ?在實際的項目中,最難纏的問題就是內存泄漏,當然還有panic之類的,內存泄漏分為兩部分用戶空間的和內核空間的.我們就分別從這兩個層面分析一下.

? ? ?用戶空間查看內存泄漏和解決都相對簡單。定位問題的方法和工具也很多相對容易.我們來看看.

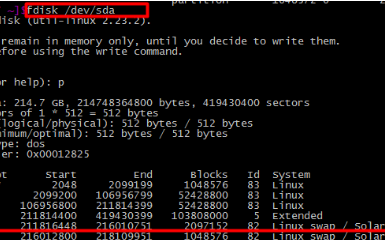

? ? 1. 查看內存信息

? ? ?cat /proc/meminfo、free、cat /proc/slabinfo等

? ? 2.? 查看進程的狀態信息

? ? top、ps、cat /proc/pid/maps/status/fd等

? ?通常我們定位問題先在shell下ps查看當前運行進程的狀態,嵌入式上可能顯示的信息會少一些.

點擊(此處)折疊或打開

root@hos-machine:~#?ps?-uaxw

USER PID %CPU %MEM VSZ RSS TTY STAT?START?TIME COMMAND

root 1 0.0 0.1 119872 3328???Ss 8月10 0:24?/sbin/init splash

root 2 0.0 0.0 0 0???S?8月10 0:00?[kthreadd]

root 3 0.0 0.0 0 0???S?8月10 0:44?[ksoftirqd/0]

root 5 0.0 0.0 0 0???S

root 7 0.0 0.0 0 0???S?8月10 3:50?[rcu_sched]

root 8 0.0 0.0 0 0???S?8月10 0:00?[rcu_bh]

root 9 0.0 0.0 0 0???S?8月10 0:12?[migration/0]

root 10 0.0 0.0 0 0???S?8月10 0:01?[watchdog/0]

root 11 0.0 0.0 0 0???S?8月10 0:01?[watchdog/1]

root 12 0.0 0.0 0 0???S?8月10 0:12?[migration/1]

root 13 0.0 0.0 0 0???S?8月10 1:18?[ksoftirqd/1]

root 15 0.0 0.0 0 0???S

root 16 0.0 0.0 0 0???S?8月10 0:01?[watchdog/2]

root 17 0.0 0.0 0 0???S?8月10 0:12?[migration/2]

root 18 0.0 0.0 0 0???S?8月10 1:19?[ksoftirqd/2]

root 20 0.0 0.0 0 0???S

root 21 0.0 0.0 0 0???S?8月10 0:01?[watchdog/3]

root 22 0.0 0.0 0 0???S?8月10 0:13?[migration/3]

root 23 0.0 0.0 0 0???S?8月10 0:41?[ksoftirqd/3]

root 25 0.0 0.0 0 0???S

root 26 0.0 0.0 0 0???S?8月10 0:00?[kdevtmpfs]

root 27 0.0 0.0 0 0???S

root 329 0.0 0.0 0 0???S

root 339 0.0 0.0 0 0???S

root 343 0.0 0.0 0 0???S

root 368 0.0 0.0 39076 1172???Ss 8月10 0:10?/lib/systemd/systemd-journald

root 373 0.0 0.0 0 0???S?8月10 0:00?[kauditd]

root 403 0.0 0.0 45772 48???Ss 8月10 0:01?/lib/systemd/systemd-udevd

root 444 0.0 0.0 0 0???S

systemd+?778 0.0 0.0 102384 516???Ssl 8月10 0:04?/lib/systemd/systemd-timesyncd

root 963 0.0 0.0 191264 8???Ssl 8月10 0:00?/usr/bin/vmhgfs-fuse?-o subtype=vmhgfs-fuse,allow_other?/mnt/hgfs

root 987 9.6 0.0 917024 0???Ssl 8月10 416:08?/usr/sbin/vmware-vmblock-fuse?-o subtype=vmware-vmblock,default_permi

root 1007 0.2 0.1 162728 3084???Sl 8月10 10:14?/usr/sbin/vmtoolsd

root 1036 0.0 0.0 56880 844???S?8月10 0:00?/usr/lib/vmware-vgauth/VGAuthService?-s

root 1094 0.0 0.0 203216 388???Sl 8月10 1:48?./ManagementAgentHost

root 1100 0.0 0.0 28660 136???Ss 8月10 0:02?/lib/systemd/systemd-logind

message+?1101 0.0 0.1 44388 2608???Ss 8月10 0:21?/usr/bin/dbus-daemon?--system?--address=systemd:?--nofork?--nopidfile

root 1110 0.0 0.0 173476 232???Ssl 8月10 0:54?/usr/sbin/thermald?--no-daemon?--dbus-enable

root 1115 0.0 0.0 4400 28???Ss 8月10 0:14?/usr/sbin/acpid

root 1117 0.0 0.0 36076 568???Ss 8月10 0:01?/usr/sbin/cron?-f

root 1133 0.0 0.0 337316 976???Ssl 8月10 0:00?/usr/sbin/ModemManager

root 1135 0.0 0.2 634036 5340???Ssl 8月10 0:19?/usr/lib/snapd/snapd

root 1137 0.0 0.0 282944 392???Ssl 8月10 0:06?/usr/lib/accountsservice/accounts-daemon

syslog 1139 0.0 0.0 256396 352???Ssl 8月10 0:04?/usr/sbin/rsyslogd?-n

avahi 1145 0.0 0.0 44900 1092???Ss 8月10 0:11 avahi-daemon:?running?[hos-machine.local]

這個是ubuntu系統里的信息比較詳細,我們可以很清晰看到VMZ和RSS的對比信息.VMZ就是這個進程申請的虛擬地址空間,而RSS是這個進程占用的實際物理內存空間.

通常一個進程如果有內存泄露VMZ會不斷增大,相對的物理內存也會增加,如果是這樣一般需要檢查malloc/free是否匹配。根據進程ID我們可以查看詳細的VMZ相關的信息。例:

點擊(此處)折疊或打開

root@hos-machine:~#?cat?/proc/1298/status?

Name:????sshd

State:????S?(sleeping)

Tgid:????1298

Ngid:????0

Pid:????1298

PPid:????1

TracerPid:????0

Uid:????0????0????0????0

Gid:????0????0????0????0

FDSize:????128

Groups:????

NStgid:????1298

NSpid:????1298

NSpgid:????1298

NSsid:????1298

VmPeak:???? 65620 kB

VmSize:???? 65520 kB

VmLck:???? 0 kB

VmPin:???? 0 kB

VmHWM:???? 5480 kB

VmRSS:???? 5452 kB

VmData:???? 580 kB

VmStk:???? 136 kB

VmExe:???? 764 kB

VmLib:???? 8316 kB

VmPTE:???? 148 kB

VmPMD:???? 12 kB

VmSwap:???? 0 kB

HugetlbPages:???? 0 kB

Threads:????1

SigQ:????0/7814

SigPnd:????0000000000000000

ShdPnd:????0000000000000000

SigBlk:????0000000000000000

SigIgn:????0000000000001000

SigCgt:????0000000180014005

CapInh:????0000000000000000

CapPrm:????0000003fffffffff

CapEff:????0000003fffffffff

CapBnd:????0000003fffffffff

CapAmb:????0000000000000000

Seccomp:????0

Cpus_allowed:????ffffffff,ffffffff

Cpus_allowed_list:????0-63

Mems_allowed:????00000000,00000001

Mems_allowed_list:????0

voluntary_ctxt_switches:????1307

nonvoluntary_ctxt_switches:????203

如果我們想查看這個進程打開了多少文件可以

?ls -l /proc/1298/fd/* | wc

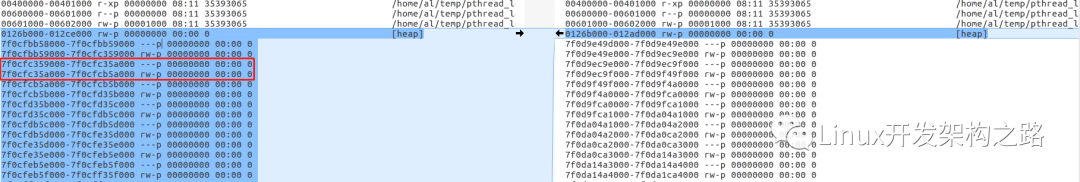

查看進程詳細的內存映射信息

cat /proc/7393/maps

我們看一下meminfo各個注釋:參考documentation/filesystem/proc.txt?

MemTotal:?Total usable ram?(i.e.?physical ram minus a few reserved bits?and?the kernel binary code)?

MemFree:?The sum?of?LowFree+HighFree

Buffers:?Relatively temporary storage?for?raw disk blocks shouldn't get tremendously large (20MB or so)

Cached: in-memory cache for files read from the disk (the pagecache). Doesn't?include?

SwapCached SwapCached:?Memory that once was swapped?out,?is swapped back?in?but still also is?in?the swapfile?(if?memory is needed it

doesn't need to be swapped out AGAIN because it is already in the swapfile. This saves I/O)

Active: Memory that has been used more recently and usually not reclaimed unless absolutely necessary.?

Inactive: Memory which has been less recently used. It is more eligible to be reclaimed for other purposes?

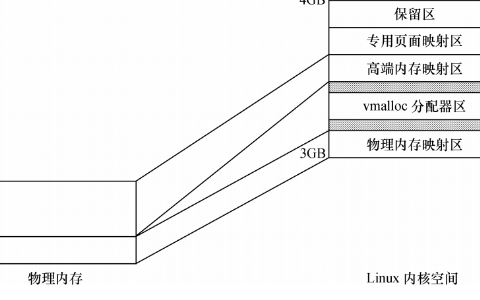

HighTotal:?

HighFree: Highmem is all memory above ~860MB of physical memory Highmem areas are for use by userspace programs, or

for the pagecache. The kernel must use tricks to access this memory, making it slower to access than lowmem.

LowTotal:

LowFree: Lowmem is memory which can be used for everything that highmem can be used for, but it is also available for the

kernel's use?for?its own data structures.?Among many other things,?it is where everything from the Slab is

allocated.?Bad things happen when you're out of lowmem.?

SwapTotal: total amount of swap space available

SwapFree: Memory which has been evicted from RAM, and is temporarily on the disk?

Dirty: Memory which is waiting to get written back to the disk?

Writeback: Memory which is actively being written back to the disk

AnonPages: Non-file backed pages mapped into userspace page tables

AnonHugePages: Non-file backed huge pages mapped into userspace page tables?

Mapped: files which have been mmaped, such as libraries?

Slab: in-kernel data structures cache

SReclaimable: Part of Slab, that might be reclaimed, such as caches

SUnreclaim: Part of Slab, that cannot be reclaimed on memory pressure?

PageTables: amount of memory dedicated to the lowest level of page tables.?

NFS_Unstable: NFS pages sent to the server, but not yet committed to stable storage?

Bounce: Memory used for block device "bounce buffers"?

WritebackTmp: Memory used by FUSE for temporary writeback buffers?

CommitLimit: Based on the overcommit ratio ('vm.overcommit_ratio'), this is the total amount of memory currently available to

be allocated on the system. This limit is only adhered to if strict overcommit accounting is enabled (mode 2 in

'vm.overcommit_memory').

The CommitLimit is calculated with the following formula: CommitLimit = ('vm.overcommit_ratio' * Physical RAM) + Swap

For example, on a system with 1G of physical RAM and 7G

of swap with a `vm.overcommit_ratio` of 30 it would

yield a CommitLimit of 7.3G.

For more details, see the memory overcommit documentation in vm/overcommit-accounting.

Committed_AS: The amount of memory presently allocated on the system. The committed memory is a sum of all of the memory which

has been allocated by processes, even if it has not been

"used" by them as of yet. A process which malloc()'s 1G

of?memory,?but only touches 300M?of?it will only show up as using 300M?of?memory even?if?it has the address space

allocated?for?the entire 1G.?This?1G is memory which has been?"committed"?to by the?VM?and?can be used at any time

by the allocating application.?With strict overcommit enabled on the system?(mode 2?in?'vm.overcommit_memory'),

allocations which would exceed the CommitLimit?(detailed above)?will?not?be permitted.?This?is useful?if?one needs

to guarantee that processes will?not?fail due to lack?of?memory once that memory has been successfully allocated.?

VmallocTotal:?total?size?of?vmalloc memory area

VmallocUsed:?amount?of?vmalloc area which is used?

VmallocChunk:?largest contiguous block?of?vmalloc area which is free

我們只需要關注幾項就ok. ?buffers/cache/slab/active/anonpages

Active= Active(anon) + Active(file) ? ?(同樣Inactive)

AnonPages: Non-file backed pages mapped into userspace page tables

buffers和cache的區別注釋說的很清楚了.

有時候不是內存泄露,同樣也會讓系統崩潰,比如cache、buffers等占用的太多,打開太多文件,而等待系統自動回收是一個非常漫長的過程.

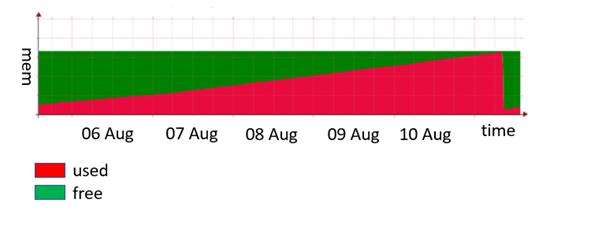

從proc目錄下的meminfo文件了解到當前系統內存的使用情況匯總,其中可用的物理內存=memfree+buffers+cached,當memfree不夠時,內核會通過

回寫機制(pdflush線程)把cached和buffered內存回寫到后備存儲器,從而釋放相關內存供進程使用,或者通過手動方式顯式釋放cache內存

點擊(此處)折疊或打開

drop_caches

Writing to?this?will cause the kernel to drop clean caches,?dentries?and?inodes from memory,?causing that memory to become free.

To free pagecache:

echo?1?>?/proc/sys/vm/drop_caches?

To free dentries?and?inodes:?

echo?2?>?/proc/sys/vm/drop_caches

To free pagecache,?dentries?and?inodes:?

echo?3?>?/proc/sys/vm/drop_caches

As?this?is a non-destructive operation?and?dirty objects are?not?freeable,?the user should run `sync`first

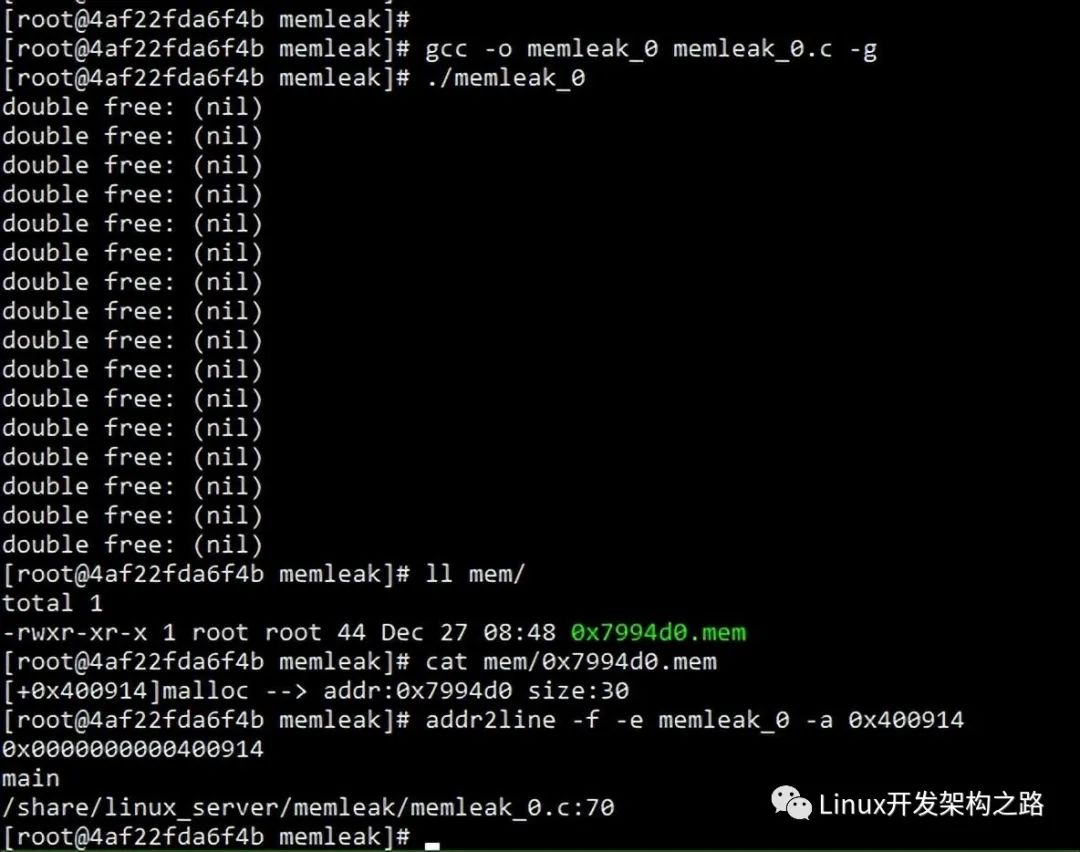

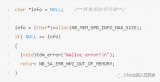

用戶空間內存檢測也可以通過mtrace來檢測用法也非常簡單,之前文章我們有提到過. 包括比較有名的工具valgrind、以及dmalloc、memwatch等.各有特點.

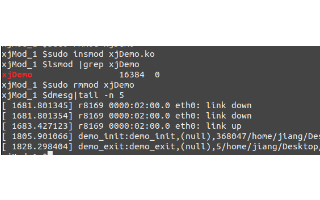

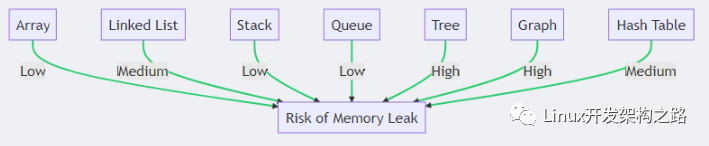

? ?內核內存泄露的定位比較復雜,先判斷是否是內核泄露了,然后在具體定位什么操作,然后再排查一些可疑的模塊,內核內存操作基本都是kmalloc

即通過slab/slub/slob機制,所以如果meminfo里slab一直增長那么很有可能是內核的問題.我們可以更加詳細的查看slab信息

cat /proc/slabinfo?

如果支持slabtop更好,基本可以判斷內核是否有內存泄漏,并且是在操作什么對象的時候發生的。

點擊(此處)折疊或打開

cat /proc/slabinfo?

slabinfo?-?version:?2.1

#?name??????:?tunables????:?slabdata??

fuse_request 0 0 288 28 2?:?tunables 0 0 0?:?slabdata 0 0 0

fuse_inode 0 0 448 18 2?:?tunables 0 0 0?:?slabdata 0 0 0

fat_inode_cache 0 0 424 19 2?:?tunables 0 0 0?:?slabdata 0 0 0

fat_cache 0 0 24 170 1?:?tunables 0 0 0?:?slabdata 0 0 0

在內核的配置中里面已經支持了一部分memleak自動檢查的選項,可以打開來進行跟蹤調試.

這里沒有深入的東西,算是拋磚引玉吧~.

?

電子發燒友App

電子發燒友App

評論